Analyzing and Navigating Electronic Theses and Dissertations

Aman Ahuja

University:

Degree:

Dissertation submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science and Applications

Committee:

Edward A. Fox, Chair; Chris North; Lifu Huang; Eugenia Rho; Wei Wei

Date:

June 12, 2023

Abstract

Electronic Theses and Dissertations (ETDs) contain scholarly information that can be of immense value to the research community. Millions of ETDs are now publicly available online, often through one of many digital libraries. However, since most of these digital libraries are institutional repositories whose primary objective is content archiving, they often lack the end-user services needed to make this data useful to the scholarly community. To effectively address the information needs of users, digital libraries should support end-user services such as document search and browsing, document recommendation, and easier navigation of long PDF documents. In recent years, advances in machine learning for text data have produced several techniques that can support such services. However, limited research has been conducted on integrating these techniques with digital libraries. This research aims to build tools and techniques for discovering and accessing the knowledge buried in ETDs, and to support end-user services for digital libraries, such as document browsing and long document navigation. First, we review several machine learning models that can be used to support such services. Next, to support a comprehensive evaluation of different models, as well as to train models tailored to ETD data, we introduce several new datasets from the ETD domain. To minimize the resources required to develop the high-quality datasets needed for supervised training, we also discuss a novel AI-aided annotation method. Finally, we propose techniques and frameworks to support digital library services such as search, browsing, and recommendation.
The key contributions of this research are as follows:
• A system to help parse long scholarly documents such as ETDs, using object detection methods trained to extract digital objects from long documents. The parsed documents can be used for downstream tasks such as long document navigation and figure and/or table search.
• Datasets to support supervised training of object detection models on scholarly documents of multiple types, such as born-digital and scanned. In addition to manually annotated datasets, a framework (along with the resulting dataset) for AI-aided annotation is also proposed.
• A web-based system for extracting information from long PDF theses and dissertations into a structured format such as XML, aimed at making scholarly literature more accessible to users with disabilities.
• A topic-modeling based framework to support exploration tasks such as searching and/or browsing documents (and document portions, e.g., chapters) by topic, document recommendation, topic recommendation, and describing temporal topic trends.

Electronic Theses and Dissertations (ETDs) contain scholarly information that can be of immense value to the research community. Millions of ETDs are now publicly available online, often through one of many online digital libraries. However, since most of these digital libraries are institutional repositories whose primary objective is content archiving, they often lack the end-user services needed to make this valuable data useful to the scholarly community. To effectively utilize such data to address the information needs of users, digital libraries should support various end-user services such as document search and browsing, document recommendation, and services that make long PDF documents easier to navigate and more accessible. Advances in machine learning for text data in recent years have led to techniques that can serve as the backbone of such end-user services. However, limited research has been conducted on integrating such techniques with digital libraries. This research aims to build tools and techniques for discovering and accessing the knowledge buried in ETDs, by parsing the information contained in the long PDF documents that make up ETDs into a more machine-friendly format. This would enable researchers and developers to build end-user services for digital libraries. We also propose a framework to support document browsing and long document navigation, which are among the important end-user services required in digital libraries.

I would like to express my heartfelt gratitude and appreciation to the following individuals and organizations who have played a pivotal role in the completion of this thesis:

First and foremost, I am deeply indebted to my advisor, Dr. Edward A. Fox, for his unwavering guidance, invaluable insights, and continuous support throughout the research process. His expertise and dedication have been instrumental in shaping the direction and quality of this work.

I extend my sincere thanks to the members of my thesis committee, Dr. Chris North, Dr. Lifu Huang, Dr. Eugenia Rho, and Dr. Wei Wei, for their valuable feedback, constructive criticism, and scholarly contributions. Their expertise and scholarly perspectives have greatly enriched the content of this thesis.

I am grateful to the undergraduate students, Alan Devera, Kecheng Zhu, Jiangyue Li, Zachary Gager, You Peng, Shelby Neal, Andrew Leavitt, Annie Tran, Brian Dinh, Kevin Dinh, Jiayue Lin, Kevin Liu, Mingkai Pang, Theodore Gunn, Zehua Zhang, Luke Wevley, Michael Nader, Elizabeth Keegan, and Gabrielle Nguyen, as well as graduate students, Chenyu Mao and Nirmal Amirthalingam, who have actively participated in this research. Their dedication, hard work, and insightful discussions have significantly contributed to the success of this thesis.

I would also like to express my thanks to William A. Ingram and the University Libraries for their invaluable support throughout the research process. Their resources, access to materials, and assistance in navigating academic databases have been indispensable.

A special mention goes to the members of the Digital Library Research Laboratory for their technical support and collaboration. Their expertise, assistance, and helpful discussions have contributed to the development and refinement of the research methodology and implementation.

I am deeply grateful to my family and friends for their unwavering support, encouragement, and understanding throughout this journey. Their belief in my abilities and constant motivation have been crucial in overcoming challenges and staying focused on the thesis.

While the list of individuals mentioned here is not exhaustive, each and every one of them has played a significant role in the completion of this thesis. I am truly grateful for their support and contributions.

Chapter 1 Introduction

1.1 Background and Motivation

Scholarly documents like ETDs contain important research findings, which are of value to a diverse group of users from the scholarly community. Examples of such users include students and researchers who want to review work related to their research area, as well as librarians and university administrators who want an overview of recent research in their institutions. With the vast amount of research being conducted across a variety of domains, millions of ETDs are now publicly available online. However, digital library services for ETDs have not evolved past simple search and browse at the metadata level, thus rendering the vast amount of information from these documents underutilized. In recent years, advances have been made in NLP-based techniques such as topic modeling, question-answering and text summarization, which might be incorporated to make ETDs more accessible. However, a majority of these documents exist as PDF files, and are often long and filled with highly specialized details. While some tools can work with these files, the results we have observed have been poor; other tools require data in a structured format such as XML. Accordingly, there is a need to build electronic infrastructure that can leverage the rich scholarly information contained within ETDs and make it accessible to the wider community.

1.2 Problem Statement

This thesis aims to develop methodologies that can make the knowledge contained in ETDs more accessible to digital library users. Although a comprehensive digital library system should ideally support multiple end-user services, like browsing, search, retrieval, and question-answering, the foremost requirement for any such service is to have data available in a structured, machine-friendly format such as XML. Hence, a major contribution of this thesis is a framework to parse ETDs in PDF into structured formats like XML. The parsed documents can then be employed for training models that support end-user services. They can also help make long documents more accessible by breaking them down into multiple smaller components like chapters and sections. Moreover, structured representations such as XML can be used to develop web-based systems, which have better compatibility with accessibility tools such as screen readers, thus allowing those with disabilities to access this information. Given the recent success of object detection models in document layout analysis, we will take the object detection approach for this work. We will develop methodologies that can address several challenges that arise in the process of parsing long PDF documents. These include limited availability of training data, heterogeneity in document types, the imbalanced number of elements in the various classes, and the resource-intensive nature of dataset annotation. Moreover, there is currently no mechanism for parsing extracted objects to determine the relationships among them and converting them into a structured format that makes them accessible to users with special needs. We also will investigate how this parsed information can be used for downstream tasks. Regarding the scope of this work, we will focus on techniques that can be helpful in navigating and browsing documents from a digital library.
For example, the most intuitive way to build a browsing system is to group items by category. Users can select a category of their preference and browse the respective documents. However, for scholarly documents, grouping documents by research area is a non-trivial task, since many documents only contain subject/department level information, which is often very high level. It is hard to classify documents into pre-defined categories, due to the absence of a unified list of categories and of the datasets needed to train such models. Hence, we will study unsupervised methods such as topic modeling for this task. The resultant topics or categories, as well as their respective documents, can then be used for supporting document browsing by research area in a digital library.
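One simple way to turn topic-model output into browsable categories, sketched below under the assumption that a trained topic model has already produced a topic-proportion vector for each document (or chapter), is to file each item under its dominant topic. The function name, document ids, and proportions are made-up illustration data, not output from this work.

```python
from collections import defaultdict

def group_by_dominant_topic(doc_topics):
    """Group documents under the topic with the largest proportion.

    `doc_topics` maps a document (or chapter) id to its topic proportions,
    as produced by some trained topic model (assumed, not shown here).
    Ties are broken in favor of the lower topic index.
    """
    groups = defaultdict(list)
    for doc_id, proportions in doc_topics.items():
        dominant = max(range(len(proportions)), key=proportions.__getitem__)
        groups[dominant].append(doc_id)
    return dict(groups)

# Hypothetical topic proportions for three ETDs over three topics.
doc_topics = {
    "etd-001": [0.70, 0.20, 0.10],
    "etd-002": [0.10, 0.80, 0.10],
    "etd-003": [0.60, 0.30, 0.10],
}
print(group_by_dominant_topic(doc_topics))  # {0: ['etd-001', 'etd-003'], 1: ['etd-002']}
```

Assigning each item to a single topic keeps the browsing interface simple; listing an item under every topic above some proportion threshold is an alternative when documents are strongly interdisciplinary.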

1.3 Research Hypotheses

The central hypotheses of this research are listed below:
• H1: Object detection based document layout analysis methods for long scholarly documents, trained on high-quality domain-specific labeled data, perform better than those trained on a larger dataset originating from other related domains, such as research papers.
• H2: Pre-training on other scholarly datasets, albeit from a different domain such as research papers, improves the performance of document layout analysis methods on long scholarly documents such as ETDs.
• H3: Training on derived datasets, such as augmented versions of the original training data, can significantly improve the performance of layout analysis models.
• H4: To perform well on other document types, such as scanned documents, models trained on a specific type of document, such as born-digital ones, require additional training using techniques, like augmentation, that help bridge the distribution gap.
• H5: AI-aided annotation methods, such as using models trained on existing smaller datasets to extract weak labels for unlabeled data, reduce the resources required for annotating additional data.
• H6: Models trained on datasets with skewed class-label distributions achieve better performance on minority classes when trained on additional data from those classes, such as data obtained from AI-aided annotation methods.
• H7: Combining the predictive power of AI models with rules formulated from human domain expertise reduces errors in predictive tasks such as document structure parsing.
• H8: Neural topic models can outperform traditional topic models, such as LDA, when applied to scholarly documents such as ETDs and their chapters.

1.4 Research Questions

Based on the hypotheses listed above, the work proposed in this thesis will focus on the following research questions:
• R1: What are the different elements in an ETD that are important for training machine learning models for downstream tasks like searching, browsing, question-answering, etc.? How can we develop a dataset that can support training supervised machine learning models to extract these elements from an ETD?
• R2: Are datasets from other related domains, such as research papers, sufficient to train layout analysis methods for ETDs? How can these datasets benefit layout analysis methods for ETDs when used in conjunction with domain-specific datasets?
• R3: What types of augmentation strategies can be used to derive more training data for object detection models? How can we use augmented datasets to improve the performance of object detection models?
• R4: Can document analysis methods trained on documents of one type, such as born-digital PDF documents, facilitate document analysis on other types of documents, such as scanned documents?
• R5: How can annotation methods utilize the power of models trained on existing datasets to reduce the resources required in the annotation process?
• R6: How can we improve the performance of machine learning models, especially on minority classes, using datasets developed through AI-aided annotation?
• R7: How can domain expertise, such as a set of rules about syntax and structure that are known to domain experts, be used to develop post-processing rules which, when used in combination with machine learning methods, improve the process of document layout analysis?
• R8: Can neural topic models outperform traditional topic models such as LDA on commonly used topic evaluation metrics, such as coherence and topic diversity?

1.5 Overview of Chapters

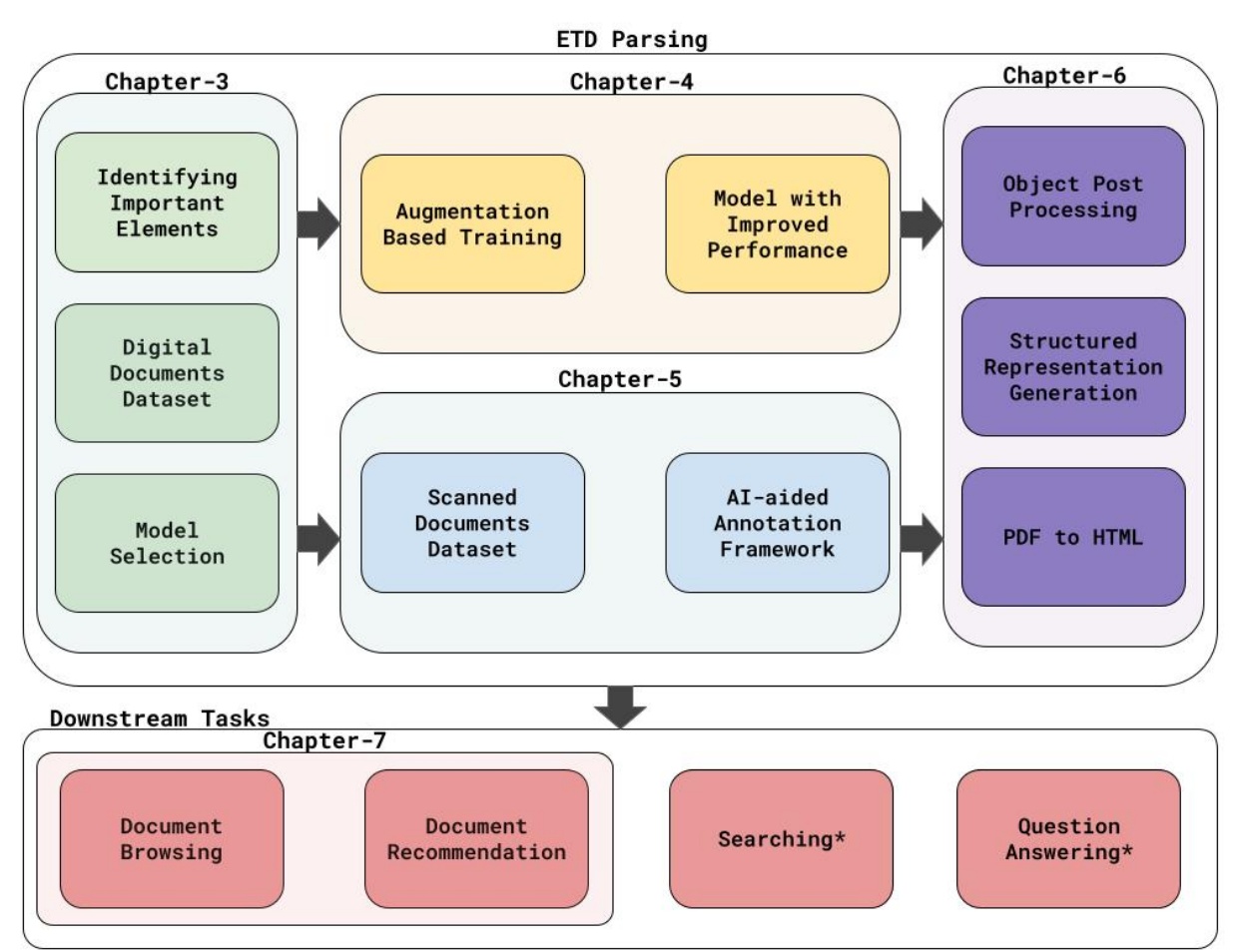

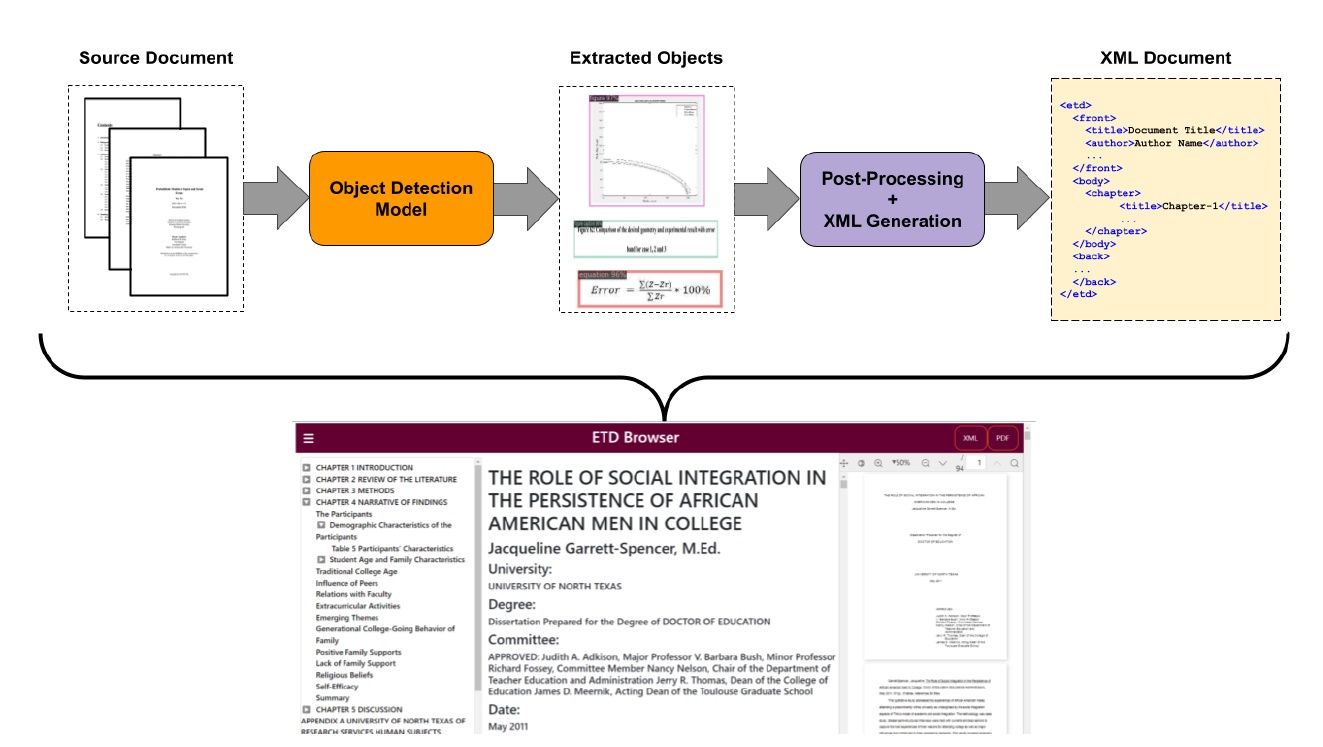

Figure 1.1 gives a high-level overview of the chapters in this thesis, along with their respective contributions. The rest of this thesis is organized as follows:
• Chapter 2 outlines some of the important techniques and datasets related to the work proposed in this thesis.
• Chapter 3 introduces a list of important elements commonly found in ETDs, and a new dataset for training document layout analysis models on ETDs. It also describes the training of object detection models and an evaluation of related models.
• Chapter 4 proposes an augmentation-based training approach for object detection models. Experimental results showing how co-training on augmented data alongside original data can improve the performance of object detection models on layout analysis are also presented.
• Chapter 5 introduces an AI-assisted framework for annotating object detection data to improve the performance of layout analysis methods on minority classes. A new dataset to support layout analysis of scanned ETDs, as well as to improve extraction of low-frequency elements such as metadata and algorithms, is also presented.
• Chapter 6 proposes a parsing framework to generate structured representations of long scholarly documents using the set of objects derived from object detection models.
• Chapter 7 introduces a framework for utilizing the elements extracted through document layout parsing for downstream tasks such as browsing and recommendation, by means of topic modeling.

1.6 Author’s Prior Work and Publications

During the course of the doctoral program, the author of this dissertation has published several peer-reviewed papers in the domains of retrieval and ranking, question-answering, and topic modeling. These are listed below. Entries 1-4 relate closely to this dissertation, and the contributions of their co-authors are hereby acknowledged.

1. Satvik Chekuri, Prashant Chandrasekar, Bipasha Banerjee, Sung Hee Park, Nila Masrourisaadat, Aman Ahuja, William A. Ingram, and Edward A. Fox. “Integrated Digital Library System for Long Documents and their Elements.” In Proceedings of the 23rd ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL 2023).
2. Aman Ahuja, Kevin Dinh, Brian Dinh, William A. Ingram, and Edward A. Fox. “A New Annotation Method and Dataset for Layout Analysis of Long Documents.” In Companion Proceedings of the ACM Web Conference 2023, pp. 834-842. 2023. https://doi.org/10.1145/3543873.3587609.
3. Aman Ahuja, Alan Devera, and Edward A. Fox. “Parsing Electronic Theses and Dissertations Using Object Detection.” In Proceedings of the First Workshop on Information Extraction from Scientific Publications (WIESP 2022, held in conjunction with AACL-IJCNLP 2022). https://aclanthology.org/2022.wiesp-1.14.pdf.
4. Aman Ahuja, Chenyu Mao, William A. Ingram, and Edward A. Fox. “Analyzing and Navigating ETDs Using Topic Models.” In The Journal of Electronic Theses and Dissertations (to appear).
5. Ming Zhu, Aman Ahuja, Da-Cheng Juan, Wei Wei, and Chandan K. Reddy. “Question Answering with Long Multiple-Span Answers.” In Findings of the Association for Computational Linguistics: EMNLP 2020, pp. 3840-3849. 2020.
6. Aman Ahuja, Nikhil Rao, Sumeet Katariya, Karthik Subbian, and Chandan K. Reddy. “Language-Agnostic Representation Learning for Product Search on E-Commerce Platforms.” In Proceedings of the 13th International Conference on Web Search and Data Mining, pp. 7-15. 2020.
7. Xuan Zhang, Zhilei Qiao, Aman Ahuja, Weiguo Fan, Edward A. Fox, and Chandan K. Reddy. “Discovering Product Defects and Solutions from Online User Generated Contents.” In The World Wide Web Conference, pp. 3441-3447. 2019.
8. Ming Zhu, Aman Ahuja, Wei Wei, and Chandan K. Reddy. “A Hierarchical Attention Retrieval Model for Healthcare Question Answering.” In The World Wide Web Conference, pp. 2472-2482. 2019.
9. Aman Ahuja, Ashish Baghudana, Wei Lu, Edward A. Fox, and Chandan K. Reddy. “Spatio-Temporal Event Detection from Multiple Data Sources.” In Pacific-Asia Conference on Knowledge Discovery and Data Mining, pp. 293-305. Springer, Cham, 2019.
10. Vineeth Rakesh, Weicong Ding, Aman Ahuja, Nikhil Rao, Yifan Sun, and Chandan K. Reddy. “A Sparse Topic Model for Extracting Aspect-Specific Summaries from Online Reviews.” In Proceedings of the 2018 World Wide Web Conference, pp. 1573-1582. 2018.
11. Aman Ahuja, Wei Wei, Wei Lu, Kathleen M. Carley, and Chandan K. Reddy. “A Probabilistic Geographical Aspect-Opinion Model for Geo-tagged Microblogs.” In 2017 IEEE International Conference on Data Mining (ICDM), pp. 721-726. IEEE, 2017.

Chapter 2 Review of Literature

2.1 Document Layout Analysis: Datasets

With the growing interest in using object detection based methods for document layout analysis, several datasets have been introduced. Many of these datasets focus on specific object types. For instance, TableBank [23], ScanBank [19], and MFD [3] consist of tables, figures, and equations, respectively. Several datasets that consist of a diverse set of objects have also been introduced. HJDataset [34] consists of historical Japanese documents. PRImA [4] consists of document images from magazines and research papers. PubLayNet [47] is based on PDF articles from PubMed Central. The number of different object types, however, is limited in these datasets. DocBank [24] is a large dataset that consists of a diverse set of objects from research papers. However, given the differences between research papers and long documents such as ETDs, models trained on DocBank do not generalize well to ETDs.

2.2 Document Layout Analysis: Annotation Methods

Because dataset annotation is intensive in terms of both time and cost, researchers have proposed several techniques to annotate training datasets for object detection models. For PDF documents with an accompanying MS-Word, XML, or LaTeX file, automatic extraction based on tags is possible [23, 24]. However, in the case of scanned documents, existing rule-based approaches do not yield high-quality results. In such cases, techniques have been explored that assist annotators, or guide them to annotate the samples about which the model is most uncertain [48].
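As a rough illustration of uncertainty-guided annotation, the sketch below ranks unlabeled pages by how uncertain a detector is about them and returns the ones to send to human annotators first. Scoring a page by its least-confident detection, and treating pages with no detections as maximally uncertain, is one simple heuristic assumed here; it is not necessarily the strategy used in [48], and the function and page names are hypothetical.

```python
def select_for_annotation(predictions, budget):
    """Pick the `budget` pages a detector is least sure about.

    `predictions` maps a page id to the confidence scores of the objects detected
    on that page. A page's uncertainty is taken to be 1 minus its lowest score,
    and pages with no detections count as maximally uncertain (1.0).
    """
    def uncertainty(page_id):
        scores = predictions[page_id]
        return 1.0 - min(scores) if scores else 1.0

    ranked = sorted(predictions, key=uncertainty, reverse=True)
    return ranked[:budget]

# Hypothetical detector confidences for four unlabeled pages.
predictions = {
    "page-001": [0.98, 0.95],  # confident about every object
    "page-002": [0.55, 0.97],  # one doubtful object
    "page-003": [],            # nothing detected at all
    "page-004": [0.80],
}
print(select_for_annotation(predictions, budget=2))  # ['page-003', 'page-002']
```

Spending the annotation budget on these pages first is the intuition behind uncertainty sampling: pages the model already handles confidently add little new information.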

2.3 Document Layout Analysis: Techniques

Early works in the domain of document layout understanding used rule-based approaches [14, 22]. Other approaches, e.g., GROBID [26] and CERMINE [38], designed for parsing scientific documents, primarily focus on short documents such as research papers, and use an ensemble of sequence labeling methods for document parsing. With the advent of deep-learning based object detection methods such as Fast-RCNN [12], Faster-RCNN [32], and YOLO [30, 40], document layout analysis based on object detection has been proposed. LayoutParser [35] uses object detection models that have been pre-trained on different object detection datasets to support layout understanding. However, since it primarily uses research-paper based datasets, it does not perform well on ETDs. Moreover, the number of object types it supports is very limited. More recently, layout-based language models [17, 45, 46] have been proposed. This line of work uses a multimodal architecture, i.e., a combination of visual and textual features, to pre-train the model on a large corpus of unlabeled data consisting of document images and their corresponding text. Although these models can then be fine-tuned on downstream tasks such as object detection, they still require domain-specific annotated data for fine-tuning. Recently, to make documents more accessible, services such as SciA11y [41] have been developed. However, their scope is limited to research papers, rather than long documents such as books and ETDs.

2.4 Analysis of ETDs

With the growing number of ETDs that are publicly available on the web, techniques aimed at analyzing ETDs have also gained interest in the research community. [39] proposes a framework for automatic crawling of ETDs from public repositories, as well as the resultant corpus of ETDs. An important line of work in the analysis of ETDs aims to extract elements, such as metadata [8, 9], URLs [33], etc. [29] proposes an XML schema for ETDs in a digital library.

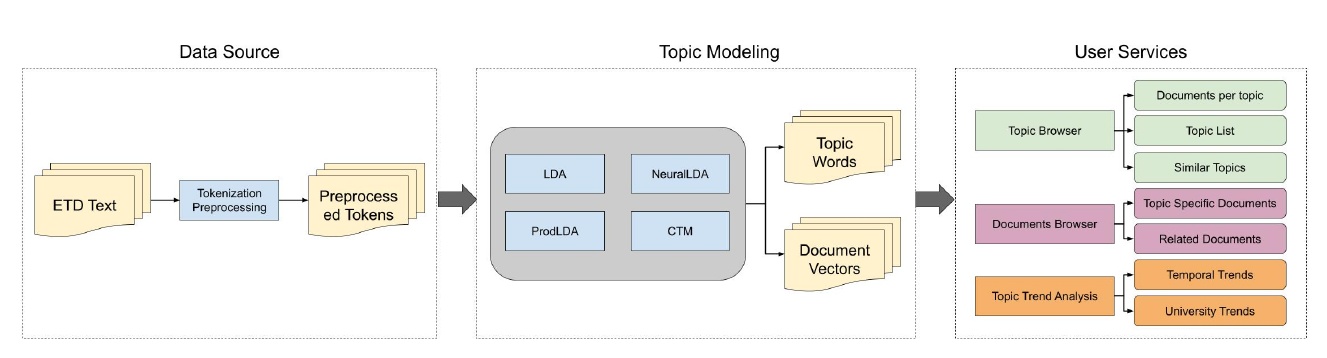

2.5 Topic Modeling

Topic modeling has been widely studied in the domain of text mining to discover latent topics. One of the earliest methods to discover topics in text documents was probabilistic Latent Semantic Indexing (pLSI) [16]. However, since pLSI is based on the likelihood principle and does not define a generative process for documents, it cannot assign probabilities to new documents. This was alleviated by Latent Dirichlet Allocation (LDA) [6], which models each document as a mixture over topics, and each topic as a distribution over words. With advances in the field of deep learning, neural topic models have gained increasing interest. Neural Variational Document Model (NVDM) [27] is a neural topic model that uses an unsupervised generative model based on Variational Autoencoders (VAE) [21]. Several other topic models that use a VAE-based architecture have been proposed [10, 28, 36]. More recently, pre-trained language models like BERT [20] and RoBERTa [25] have shown significant performance improvements in many NLP tasks due to their ability to learn contextualized representations of text. Consequently, several topic models that incorporate representations from pre-trained language models have been proposed. BERTopic [13] uses a clustering-based approach to first cluster documents based on their language-model-derived representations, and then extracts the most representative words, i.e., topics, for each cluster using a TF-IDF based approach. In this process, however, the topics are not learned; rather, they are extracted using a post-processing mechanism. Contextualized Topic Model (CTM) [5] proposed an end-to-end learnable architecture that uses language-model-derived representations from Sentence-BERT [31] along with bag-of-words representations, in a VAE-based architecture similar to ProdLDA [36].
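The keyword-extraction step of a BERTopic-style pipeline can be sketched as a class-based TF-IDF: each cluster's documents are pooled into one pseudo-document, and a word scores highly if it is frequent in that cluster but rare across clusters. The weighting below, term frequency normalized by cluster length times log(1 + A / f_w), where A is the average number of words per cluster and f_w is the word's total frequency, follows the general form of BERTopic's c-TF-IDF, but the exact normalization is an assumption. The clustering step itself is not shown, and the toy clusters are made up.

```python
import math
from collections import Counter

def top_words_per_cluster(clusters, k=2):
    """Return the k highest-weighted words per cluster under a class-based TF-IDF.

    `clusters` maps a cluster id to the tokenized documents assigned to it,
    e.g. by clustering language-model embeddings (not shown here).
    """
    # Treat each cluster as a single pseudo-document by pooling its token counts.
    class_counts = {c: Counter(tok for doc in docs for tok in doc) for c, docs in clusters.items()}
    total_counts = Counter()
    for counts in class_counts.values():
        total_counts.update(counts)
    avg_words = sum(total_counts.values()) / len(class_counts)  # A: average words per cluster

    topics = {}
    for c, counts in class_counts.items():
        n_words = sum(counts.values())
        # Frequent-in-cluster words score high; words common to all clusters are damped.
        weights = {
            w: (f / n_words) * math.log(1 + avg_words / total_counts[w])
            for w, f in counts.items()
        }
        topics[c] = sorted(weights, key=weights.get, reverse=True)[:k]
    return topics

clusters = {
    0: [["neural", "network", "study"], ["neural", "network", "study"]],
    1: [["library", "metadata", "study"], ["library", "metadata", "study"]],
}
print(top_words_per_cluster(clusters))  # {0: ['neural', 'network'], 1: ['library', 'metadata']}
```

In the toy data, "study" is as frequent as any other word within each cluster, but because it appears in both clusters its weight is lower, so it does not surface as a topic word.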

Chapter 3 Parsing Long PDF Documents Using Object Detection

3.1 Chapter Overview

In this chapter, we propose a set of elements in an ETD that are important for downstream tasks like searching, browsing, question-answering, etc. We also introduce ETD-OD, a new object detection dataset that contains over 25K page images originating from 200 ETDs, consisting of elements that can be important sources of information in an ETD. Finally, we investigate the performance of various state-of-the-art object detection models for document layout understanding on ETDs using the proposed dataset.

3.2 ETD Elements

ETDs do not conform to a universally accepted format, since different colleges and universities have their own specific standards and requirements for ETDs. In this section, we discuss the elements that are typically found in ETDs and would be important to extract for further analysis and downstream tasks. This list was curated after extensive discussions with digital librarians and researchers. We broadly categorize the different elements of ETDs into the following two-level taxonomy, i.e., a set of broad classes with narrower classes within them.

3.2.1 Metadata

The metadata consists of elements that contain uniquely identifying information about an ETD, including information found on the front page. Key metadata elements are:
• Title: The main title of the document.
• Author: Name of the document author.
• Date: Date (or month/year) when the document was published.
• University: University/institution of the author.
• Committee: Committee that approved the document, e.g., the student's graduate committee.
• Degree: Degree (e.g., Master of Science, Doctor of Philosophy) being earned.

3.2.2 Abstract

The abstract is an important element of an ETD, as it contains a summary of the work, typically about a page long. Its elements include:
• Abstract Heading: Since many ETDs contain multiple abstracts, such as a technical abstract and a general audience abstract, or an abstract in English as well as one in the original language, extracting the abstract heading makes it easier to segment them, and could be helpful in categorizing each abstract by audience type.
• Abstract Text: The actual text of the abstract.

3.2.3 List of Contents

The list of contents (also referred to as table of contents) of an ETD indicates where different components are located based on their page numbers. This helps with accurately mapping the chapters and sections, as well as figures and tables, since they are generally included in the list of contents. This subcategory includes the following elements:
• List of Contents Heading: This helps identify the specific type of list (e.g., list of chapters/sections, list of figures, list of tables).
• List of Contents Text: This is the actual list of entries for this type of content.
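Because the list of contents ties each entry to a page number, even a simple text pattern can recover (number, title, page) triples from its lines once the List of Contents Text element has been extracted. The sketch below assumes entries use dot leaders, as in "1.2 Problem Statement .......... 5", with Arabic or lowercase Roman page numbers; real ETDs vary widely, so the pattern and the parse_toc_line helper are illustrative assumptions rather than a robust parser.

```python
import re

# Illustrative pattern: optional section number, title, dot leaders, page number
# (Arabic digits, or lowercase Roman numerals as commonly used in front matter).
TOC_LINE = re.compile(
    r"^\s*(?P<number>\d+(?:\.\d+)*)?\s*(?P<title>.*?)\s*\.{2,}\s*(?P<page>\d+|[ivxlcdm]+)\s*$"
)

def parse_toc_line(line):
    """Return {'number', 'title', 'page'} for a list-of-contents entry, or None if it does not match."""
    match = TOC_LINE.match(line)
    return match.groupdict() if match else None

for line in ["1.2 Problem Statement .......... 5", "List of Figures .......... vii", "Introduction 1"]:
    print(parse_toc_line(line))
```

Entries without dot leaders (the last example) are simply rejected. Mapping a printed page number to a PDF page index additionally requires an offset for the front matter, which the Page Number elements described below can help estimate.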

3.2.4 Main Content

Chapters are one of the most important components of an ETD, as they contain detailed information about the research described in the document. This subcategory consists of elements that can typically be found in the chapters of an ETD.
• Chapter Title: The title of the chapter.
• Section: Quite often, chapters themselves can be long. It may be desirable to have further delimiters such as section headers. Hence, we include the section names (along with other identifiers such as numbers), which can be used for further splitting of the document.
• Paragraph: The main textual content of the ETD.
• Figure: This includes figures, charts, and other visual illustrations included in the document.
• Figure Caption: The text caption that describes the figure.
• Table: The table element category.
• Table Caption: The text caption that describes the table.
• Equation: Mathematical equation.
• Equation Number: Quite often, equations are numbered, which can be helpful in linking them to the list of equations that may be included in the document.
• Algorithm: Algorithms, such as pseudo-code.
• Footnote: We separate footnotes from regular paragraphs, as they typically provide auxiliary information which might be undesirable in many downstream tasks, such as summary generation.
• Page Number: Page numbers, which could be helpful in cross-referencing pages and the objects contained therein to the list of contents.

3.2.5 Bibliography

We also include bibliographic elements in the list of objects. They are described below:
• Reference Heading: The header that indicates the start of the references list.
• Reference Text: The actual list of references cited in the document.

In our dataset, we regard appendices as chapters, since they contain many elements that are found in the main chapters. They can, however, be easily differentiated from main chapters based on the title.
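The two-level taxonomy can be encoded directly as a mapping from broad classes to fine-grained labels, which makes it easy to look up an element's broad class or to assign the integer class ids that object detection libraries typically expect. The label spellings follow the lists in this section; the variable names and the id assignment (in listing order) are assumptions for illustration, not the ordering used in ETD-OD.

```python
# Broad classes mapped to fine-grained element labels, following the lists above.
# Appendices are treated as chapters, so they have no separate label.
ETD_TAXONOMY = {
    "Metadata": ["Title", "Author", "Date", "University", "Committee", "Degree"],
    "Abstract": ["Abstract Heading", "Abstract Text"],
    "List of Contents": ["List of Contents Heading", "List of Contents Text"],
    "Main Content": [
        "Chapter Title", "Section", "Paragraph", "Figure", "Figure Caption",
        "Table", "Table Caption", "Equation", "Equation Number", "Algorithm",
        "Footnote", "Page Number",
    ],
    "Bibliography": ["Reference Heading", "Reference Text"],
}

# Fine-grained label -> broad class.
BROAD_CLASS = {label: broad for broad, labels in ETD_TAXONOMY.items() for label in labels}

# Fine-grained label -> contiguous integer id (hypothetical ordering: order of listing).
LABEL_ID = {label: i for i, label in enumerate(BROAD_CLASS)}

print(len(LABEL_ID), BROAD_CLASS["Figure Caption"], LABEL_ID["Abstract Heading"])  # 24 Main Content 6
```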

3.3 Dataset

In this section we introduce ETD-OD, an object detection dataset for layout analysis on scholarly long documents such as ETDs.

3.3.1 Dataset Source

The ETD-OD dataset consists of 25K page images from 200 theses and dissertations. These documents were downloaded from publicly accessible institutional repositories, and were uniformly sampled with regard to degree, domain, and institution. Since object detection requires images as input data, the documents were split into page images using the pdf2image Python library. These images were then used for annotation.

3.3.2 Annotation

We use Roboflow for annotating the page images in our dataset. Each annotation was done by one of six undergraduate students, each of whom was a computer science student in their junior year or above. Each data sample was further validated for correctness by two graduate students.

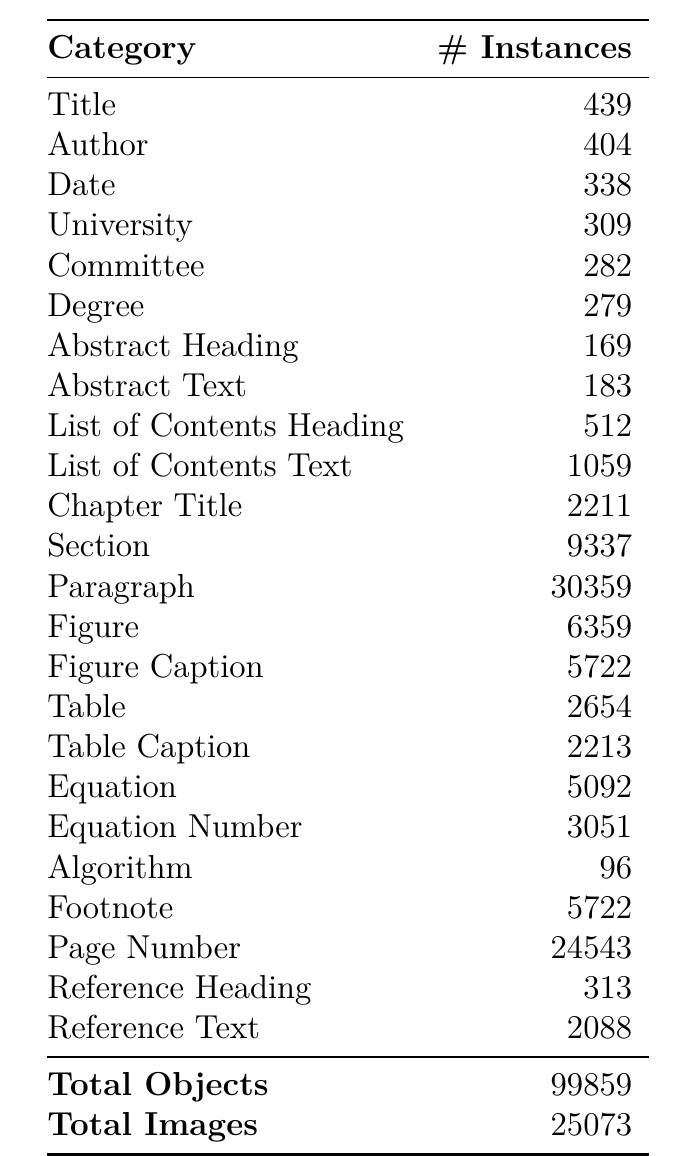

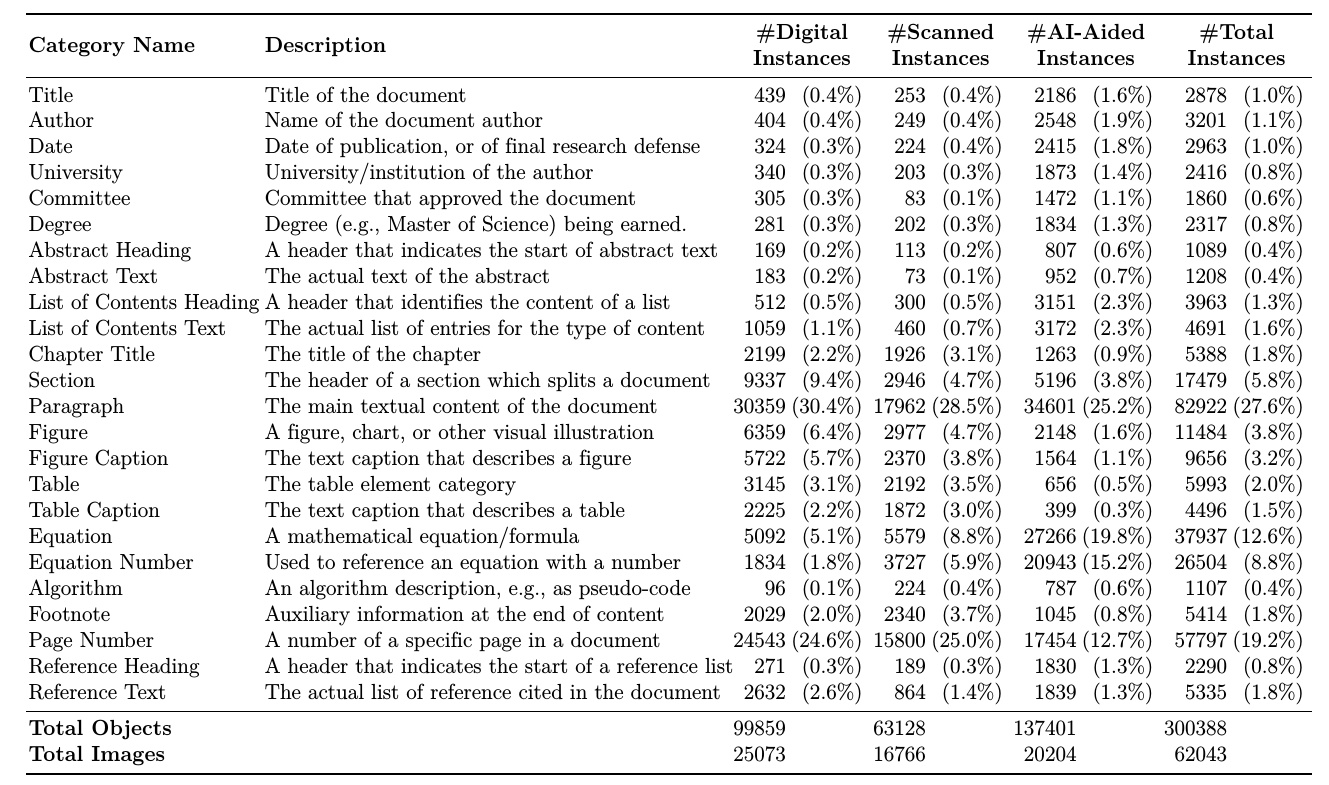

3.3.3 Dataset Statistics

Table 3.1 shows detailed statistics for the different object categories in our dataset. The dataset consists of ∼25K page images and ∼100K bounding boxes spanning different object categories. Owing to the variation in the frequency of occurrence of the various object categories in documents, some categories have many more samples than others. Elements such as paragraphs can be found on most pages, and hence, paragraphs are the dominant category in our dataset. 80% of the images and their corresponding objects were used for training, while the remaining 20% were used as the validation set.
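A reproducible 80/20 split of page images can be sketched with a seeded shuffle from Python's standard library. The function name, seed, file names, and rounding rule are assumptions. The sketch splits at the page-image level, matching the description above; grouping pages by their source ETD before splitting is a possible variant that would keep pages of one document from appearing in both sets.

```python
import random

def split_images(image_ids, train_fraction=0.8, seed=0):
    """Shuffle image ids with a fixed seed and split them into train/validation lists."""
    ids = sorted(image_ids)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(ids)  # private RNG: no effect on global random state
    cut = round(train_fraction * len(ids))
    return ids[:cut], ids[cut:]

train, val = split_images([f"page_{i:05d}.jpg" for i in range(100)])
print(len(train), len(val))  # 80 20
```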

3.4 Proposed Framework

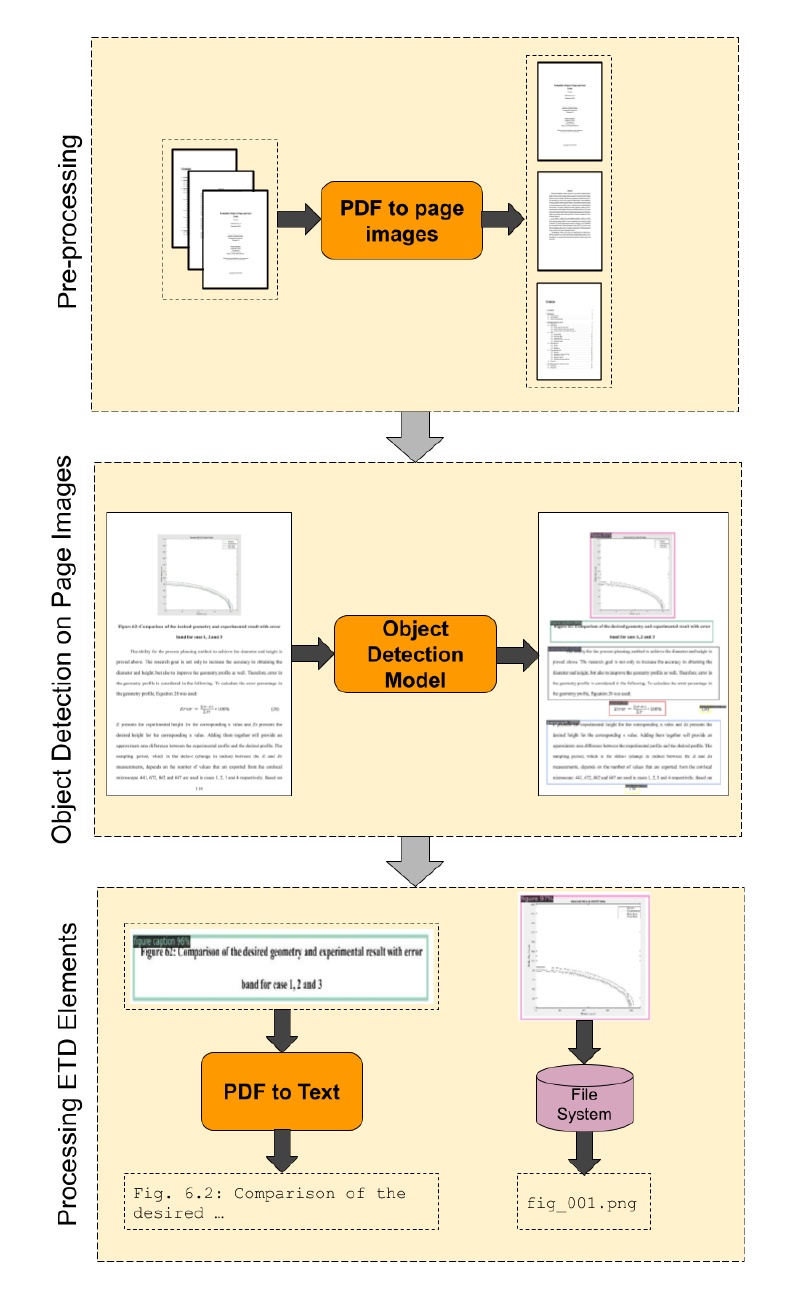

We now introduce the proposed framework for extracting important elements from an ETD by means of object detection. The architecture of our framework is illustrated in Figure 5.3. The different modules shown can broadly be divided into the following three categories.

3.4.1 Data and Preprocessing

Since our framework is primarily built for parsing long scholarly documents, it takes the PDF version of the document as input. The input file is converted to individual page images (.jpg format) using Python-based PDF libraries such as pdf2image. Next, the page images are individually fed to the Element Extraction module for further processing.
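A minimal sketch of this preprocessing step with pdf2image follows. `convert_from_path` is pdf2image's function for rendering PDF pages to PIL images, and it requires the poppler utilities to be installed. The helper names, the zero-padded file naming, and the 200 dpi setting (pdf2image's default) are illustrative assumptions, not the framework's actual code.

```python
from pathlib import Path

def page_image_path(out_dir, page_no):
    """File name for a 1-based page number, zero-padded so files sort in page order."""
    return Path(out_dir) / f"page_{page_no:04d}.jpg"

def pdf_to_page_images(pdf_path, out_dir, dpi=200):
    """Render every page of a PDF to a JPEG file and return the paths in page order."""
    # Imported lazily so the naming helper above works without pdf2image/poppler.
    from pdf2image import convert_from_path

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    paths = []
    for page_no, image in enumerate(convert_from_path(pdf_path, dpi=dpi), start=1):
        path = page_image_path(out_dir, page_no)
        image.save(path, "JPEG")  # PIL image -> .jpg on disk
        paths.append(path)
    return paths
```

By default, `convert_from_path` loads all pages into memory at once. For very long ETDs, its `first_page` and `last_page` arguments can be used to render the document in batches.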

3.4.2 Element Extraction using Object Detection

This module forms the backbone of our system. It takes the individual page images as input and uses an object detection model, such as Faster-RCNN or YOLO, to detect objects. These models are first trained on the ETD-OD dataset. The specific details of training the object detection models are given in later sections of this chapter. When the object detection models are used within this module, only inference is performed, and the model parameters are not updated. The output of object detection is a list of elements, where each element contains its bounding box coordinates along with its category label. This process is repeated for every page in the document, which yields a list of pages accompanied by their respective elements. In some instances, an object detected by the model is assigned to a different, yet similar, category. In such cases, we use post-processing rules to correct the predictions. For example, one common error is misclassifying an abstract heading as a chapter heading, since both elements often appear in a larger font at the top of a page. This error can be corrected by enforcing a constraint such as: a chapter heading in the first 10 pages that matches the keyword “abstract” is the abstract heading. We use a set of such rules for different object types to correct misclassifications. This component is discussed in detail in Chapter 6.
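The abstract-heading rule above can be sketched as follows. This sketch assumes that each detected element already carries its extracted text, along with its page number, label, and box. The `Element` class and the label strings `chapter_heading` and `abstract_heading` are hypothetical. The complete rule set described in Chapter 6 is not reproduced here.

```python
from dataclasses import dataclass

@dataclass
class Element:
    page: int      # 1-based page index
    label: str     # predicted category (hypothetical label names)
    bbox: tuple    # (x_min, y_min, x_max, y_max) in pixels
    text: str = "" # text extracted from the element's region, if any

def fix_abstract_heading(elements, max_page=10, keyword="abstract"):
    """Relabel chapter headings on the first `max_page` pages whose text contains `keyword`."""
    for el in elements:
        if (el.label == "chapter_heading"
                and el.page <= max_page
                and keyword in el.text.lower()):
            el.label = "abstract_heading"
    return elements

elements = [
    Element(2, "chapter_heading", (80, 60, 520, 110), "ABSTRACT"),
    Element(3, "chapter_heading", (80, 60, 520, 110), "Chapter 1 Introduction"),
]
print([e.label for e in fix_abstract_heading(elements)])
# ['abstract_heading', 'chapter_heading']
```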

3.4.3 Post-Processing Extracted Objects

After extracting the elements from every page in the document, we divide the objects into two broad types. The first type consists of image-based objects such as figures, tables, algorithms, and equations, which need to be stored on the file system as images. We treat tables as image-based objects even though they may contain text, because extracting structured information from tables is beyond the scope of this work. The second type consists of text-based elements such as paragraphs and titles, which need further processing to be converted to plain text. We regard all object categories other than the image-based ones as textual elements. To convert text-based objects to plain text, we use off-the-shelf tools and libraries. Some PDF documents are born-digital, and their text can be easily extracted with Python libraries such as pymupdf, using the page ID and bounding box coordinates. For scanned documents, we use optical character recognition (OCR) tools such as pytesseract. For image-based elements, we crop the page image using the bounding box coordinates and record the path of the cropped image. Figures and tables are mapped to their captions based on proximity. For any figure or table element, the caption object closest to it, measured by the Euclidean distance between bounding box coordinates, is assumed to be its caption. A similar method is used to map equations to their equation numbers, with the added constraint that the y-coordinate of the center of the equation number must fall between the minimum and maximum y-coordinates of the equation object.
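The proximity matching can be sketched as below. The text does not say which points of the boxes are compared, so measuring the distance between box centers is an assumption. The function names are hypothetical, and all boxes are assumed to come from the same page.

```python
import math

def center(bbox):
    """Center (x, y) of a box given as (x_min, y_min, x_max, y_max)."""
    x0, y0, x1, y1 = bbox
    return ((x0 + x1) / 2, (y0 + y1) / 2)

def nearest_caption(obj_bbox, caption_bboxes):
    """Index of the caption whose center is closest (Euclidean) to the object's center."""
    if not caption_bboxes:
        return None
    c = center(obj_bbox)
    return min(range(len(caption_bboxes)),
               key=lambda i: math.dist(c, center(caption_bboxes[i])))

def nearest_equation_number(eq_bbox, number_bboxes):
    """Closest equation number whose center y lies within the equation's vertical extent."""
    _, y_min, _, y_max = eq_bbox
    candidates = [i for i, b in enumerate(number_bboxes)
                  if y_min <= center(b)[1] <= y_max]
    if not candidates:
        return None
    c = center(eq_bbox)
    return min(candidates, key=lambda i: math.dist(c, center(number_bboxes[i])))
```

The y-range constraint rejects an equation number from a neighbouring line, even when that number happens to be closer in Euclidean terms.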

3.5 Object Detection Training

We use the ETD-OD dataset introduced in this chapter to train object detection models for our framework. The models currently supported are:
• Faster-RCNN [32]: Faster-RCNN is a two-stage object detection model. A region proposal network generates regions of interest, which are fed to another network for final detection. We use the version of Faster-RCNN with ResNeXt-101 [44] as the backbone.
• Faster-RCNN pre-trained on DocBank [24]: Faster-RCNN (with a ResNeXt-101 backbone) is pre-trained on DocBank and then fine-tuned on ETD-OD. Although DocBank does not include all of the elements found in ETDs, we hypothesize that the scholarly nature of the pre-training documents should improve performance over the vanilla version of the model.
• YOLOv5 [18]: YOLO is a family of single-stage object detection models that perform localization and classification in a single end-to-end network. This design improves speed without a significant drop in performance. These models have shown impressive performance on various datasets [42].
• YOLOv7 [40]: This is the most recent version of YOLO, which has been shown to outperform many other object detection models.

Both Faster-RCNN models were trained on our dataset for 60K iterations, with an inference score threshold of 0.7. The models were based on the implementation included in the open-source detectron2 [43] framework. For the DocBank-pretrained version, we used the original weights and configurations open-sourced by the authors. Both YOLO versions were based on their open-source implementations and were trained for 150 epochs.
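An inference score threshold discards low-confidence detections before they reach post-processing. In detectron2, this role is played by the `MODEL.ROI_HEADS.SCORE_THRESH_TEST` setting. The framework-independent sketch below shows the same idea. The detection dictionary layout is hypothetical, and the scores are illustrative values, not model outputs.

```python
def filter_by_score(detections, threshold=0.7):
    """Drop detections whose confidence score is below the inference threshold."""
    return [d for d in detections if d["score"] >= threshold]

dets = [
    {"label": "paragraph", "score": 0.93},
    {"label": "figure", "score": 0.41},
    {"label": "table", "score": 0.70},
]
print([d["label"] for d in filter_by_score(dets)])  # ['paragraph', 'table']
```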

3.6 Experimental Results

In this section, we discuss the results obtained in the experimental analysis of our work.

3.6.1 Evaluation Metrics

For the quantitative evaluation of object detection models, the commonly used metrics are average precision (AP) and mean average precision (mAP). AP is defined as the area under the precision-recall curve for a specific class. mAP is the average of the AP values over all object classes. Both metrics come in different versions, depending on the overlap threshold used to compare predicted objects against the ground truth. This overlap is measured as Intersection over Union (IoU). For example, in mAP@0.5, every predicted object with an IoU of 50% or more with the ground truth is regarded as a correct prediction. Another commonly used version is mAP@0.5-0.95, which averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.
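The sketch below shows how these metrics fit together. It computes IoU for two boxes, AP for one class at a given IoU threshold, mAP as the mean over classes, and mAP@0.5-0.95 as the mean over the ten thresholds. It uses greedy, score-ordered matching and VOC-style all-point interpolation. COCO-style evaluators, such as those shipped with detectron2 and YOLO, use 101-point interpolation and slightly different matching, so their numbers can differ from this sketch.

```python
import numpy as np

def iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(preds, gts, iou_thr):
    """AP for one class. preds: list of (score, box); gts: list of ground-truth boxes."""
    if not gts:
        return 0.0
    preds = sorted(preds, key=lambda p: p[0], reverse=True)
    matched = [False] * len(gts)
    tp = np.zeros(len(preds))
    for k, (_, box) in enumerate(preds):
        overlaps = [iou(box, g) for g in gts]
        j = int(np.argmax(overlaps))
        # True positive only if the best-overlapping ground truth is close enough and unused.
        if overlaps[j] >= iou_thr and not matched[j]:
            matched[j] = True
            tp[k] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / len(gts)
    precision = cum_tp / np.arange(1, len(preds) + 1)
    # Area under the precision-recall curve, with precision made non-increasing.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 1, 0, -1):
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))

def mean_ap(per_class, iou_thr):
    """mAP at one IoU threshold; per_class maps class name -> (preds, gts)."""
    return float(np.mean([average_precision(p, g, iou_thr) for p, g in per_class.values()]))

def map_50_95(per_class):
    """mAP@0.5-0.95: mean of mAP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    return float(np.mean([mean_ap(per_class, t) for t in np.linspace(0.5, 0.95, 10)]))
```

For example, a predicted paragraph box covering 60% of its ground-truth box (IoU 0.6) counts as correct under mAP@0.5 but not at the 0.7 threshold. This is why mAP@0.5-0.95 rewards tighter localization.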

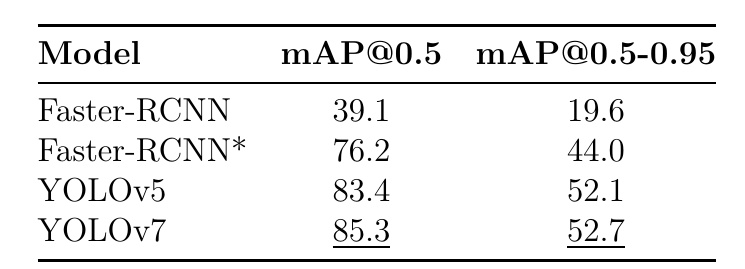

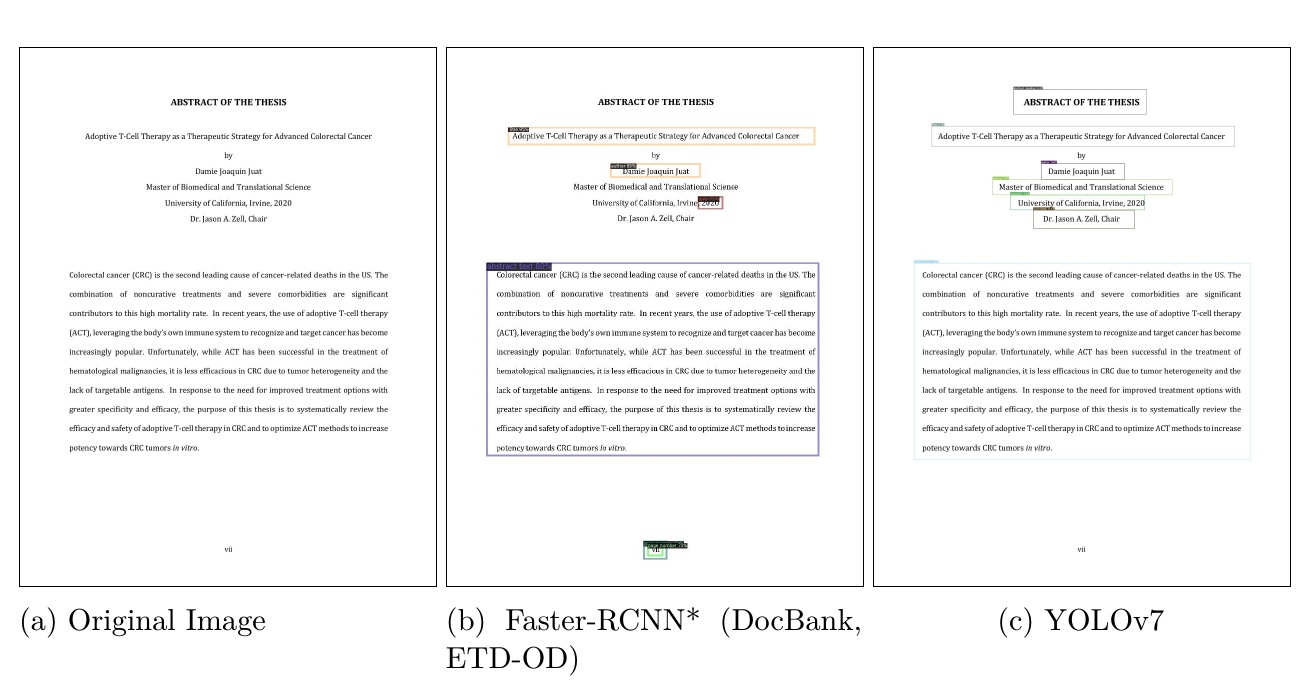

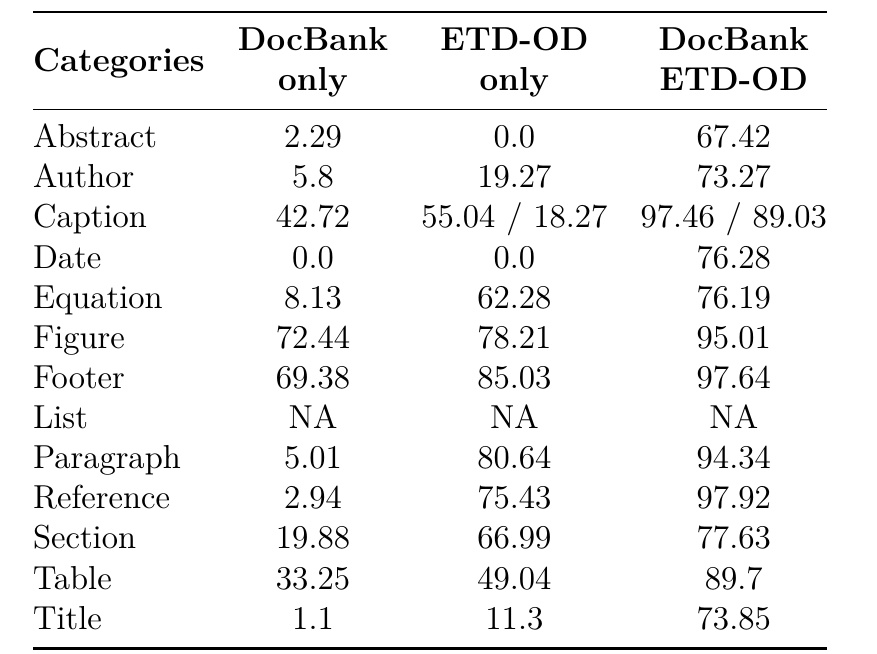

3.6.2 Analysis of Various Object Detection Models Trained on ETD-OD

Table 3.2 shows the performance of different object detection models on the validation set of our dataset. The following observations can be made from the mAP values shown.
• Pre-training on scholarly documents improves model performance: The basic version of Faster-RCNN, without any pre-training on scholarly documents, has the lowest performance among all the models. The same model, after pre-training on DocBank and fine-tuning on the ETD dataset, gives much better performance. DocBank also consists of scholarly documents, albeit of a different type. Pre-training therefore exposes the model to a diverse dataset, which results in better generalization and predictive performance.
• YOLO outperforms Faster-RCNN on the ETD dataset: YOLO models belong to the class of single-stage detectors, which are designed with an emphasis on speed. YOLO typically performs worse than Faster-RCNN when objects are small or when multiple objects are close to each other. In documents, however, most objects are large and overlap very little, because white space and line breaks separate them (for example, a header and the paragraph below it). Hence, YOLO outperforms Faster-RCNN on the ETD dataset.

3.6.3 Analysis of Detection Performance on Different Object Categories

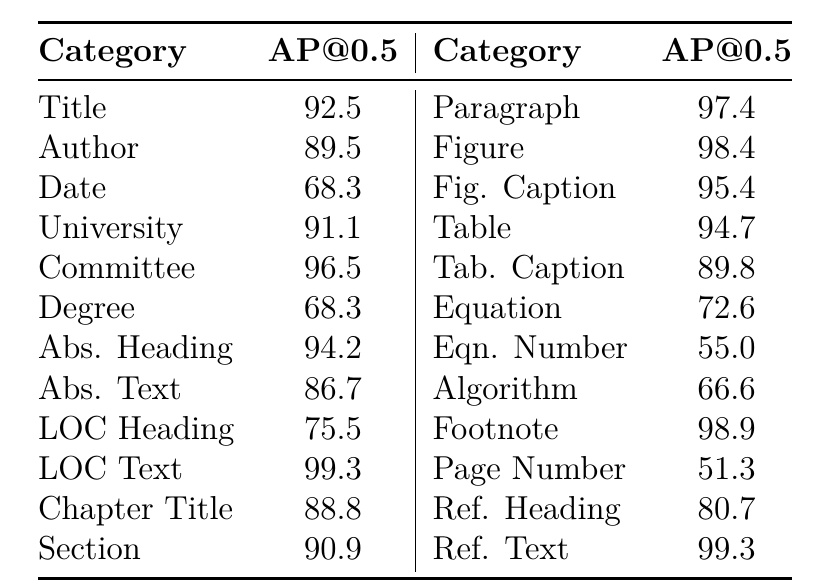

In Table 3.3, we show the performance of the best performing model (YOLOv7) on the various object categories in our dataset. The lower performance on certain categories can generally be attributed to two reasons:
• Limited Number of Training Samples: Elements such as degree, date, and algorithm have very few instances in our dataset. As a result, the performance on these classes is lower than on others.
• Smaller Object Sizes: Elements such as page number and equation number tend to be smaller than other elements. Since object detection models tend to struggle to localize small objects, the performance on these classes suffers.

3.6.4 Comparison against Other Layout Detection Datasets

Chapter 4 Augmentation-Based Training for Layout Analysis Models

4.1 Chapter Overview

In Chapter 3, we introduced a dataset that can be used to train object detection models to extract scholarly elements from ETDs. High quality manually annotated datasets are ideal for training supervised machine learning models, but the high cost of manual annotation often prevents researchers from obtaining large datasets. Hence, there is a need for methods that make the most of a limited amount of manually annotated data. One such method is data augmentation. It expands the existing object detection training data by applying one or more augmentation steps to each training image, while reusing the annotations of the original image. In this chapter, we explain an augmentation-based approach for training object detection models. We used this approach to train layout analysis models for ETDs, and experimental results show that augmentation-based training yields better performing models.

4.2 Image Augmentation

We start by introducing data augmentation for images. We are given a set of $N$ images $I = \{i_1, \ldots, i_N\}$ and annotations $\{b_1, \ldots, b_N\}$, where $b_k$ denotes the set of bounding box coordinates and the corresponding labels associated with image $i_k$. We also consider a set of image transformation functions $F = \{f_1, \ldots, f_M\}$ and a number of augmentation steps $m < M$. For each image $i_k$, our image augmentation process first samples $m$ transformation functions from $F$. Each of these transformations is applied to the image in turn, generating an augmented version $\hat{i}_k$ of $i_k$. Many different types of augmentation have been proposed for images. In our setting, we limit ourselves to techniques that change only the visual aspects of the image and leave its size and orientation untouched. The derived image $\hat{i}_k$ can thus reuse the annotation $b_k$ of the source image without any modifications. This process can be repeated multiple times, each time with a different value of $m$ and a different sample of augmentation steps, to generate multiple augmented versions of an image.
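The procedure above can be sketched in a few lines. This sketch assumes two things: that $m$ is drawn uniformly between 1 and an upper bound (`max_steps`), and that the $m$ functions are drawn from $F$ without replacement. The text only states that $m$ functions are sampled. The function name `augment_image` is hypothetical. In the usage line, the "images" are plain integers so that the composition is easy to follow.

```python
import random

def augment_image(image, annotations, transforms, max_steps, rng):
    """Create one augmented copy of `image` and reuse its annotations unchanged.

    transforms: the set F of appearance-only functions (image -> image).
    max_steps:  upper bound on m, the number of transforms applied.
    """
    m = rng.randint(1, min(max_steps, len(transforms)))  # number of steps for this copy
    chosen = rng.sample(list(transforms), m)             # m distinct functions from F
    out = image
    for f in chosen:                                     # apply them one after another
        out = f(out)
    # No transform changes geometry, so the boxes b_k still fit the new image.
    return out, annotations

rng = random.Random(0)
aug, boxes = augment_image(3, [("paragraph", (0, 0, 10, 10))],
                           [lambda x: x + 1, lambda x: x * 2], 2, rng)
```

Because each transform only alters pixel values, the returned annotations are the same object as the input annotations. No box arithmetic is needed.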

4.3 Types of Image Transformations

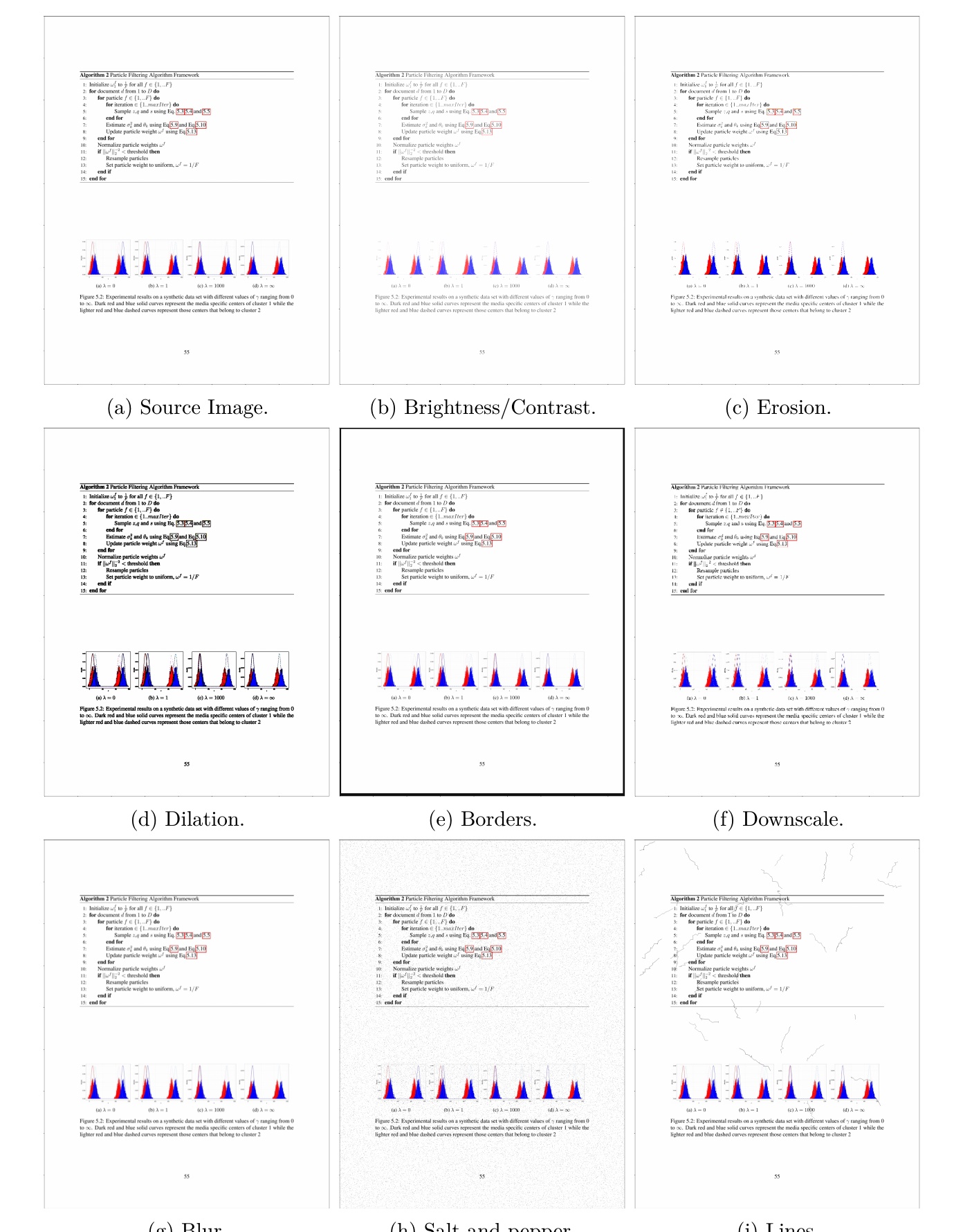

The different types of image transformations that we use to generate the augmented dataset are discussed below. An example of a page along with each of its augmented versions is shown in Fig. 4.1. This example shows the versions generated by applying each image transformation individually. In practice, we apply a series of augmentation steps to generate harder samples. The resulting augmented images are more likely to match the real-world distortions found in scholarly documents.

4.3.1 Brightness and Contrast

This step modifies the brightness and contrast of the original image. Scholarly documents often contain multiple figures and tables, each with a varied range of colors, and they are often scanned. We therefore hypothesize that training models on images of varying brightness and contrast can be helpful.
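A common way to implement this step is the linear mapping $\alpha x + \beta$, applied to every pixel and clipped to the valid range. The thesis does not specify its implementation. The sketch below assumes 8-bit grayscale page images stored as NumPy arrays, and the function name is hypothetical.

```python
import numpy as np

def brightness_contrast(img, alpha=1.0, beta=0.0):
    """Return clip(alpha * img + beta) for a uint8 grayscale page image.

    alpha > 1 raises contrast and alpha < 1 lowers it; beta shifts brightness.
    """
    out = alpha * img.astype(np.float32) + beta
    return np.clip(out, 0, 255).astype(np.uint8)
```

During augmentation, `alpha` and `beta` would be drawn at random for each generated image. The ranges used in this work are not stated.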

4.3.2 Erosion

Many academic documents, especially the scanned ones, often contain eroded text, i.e., text with broken boundaries. Due to erosion, the elements lose their clarity. This transformation can allow models to better adapt to such examples.
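One way to simulate eroded text is with a sliding-window maximum, sketched below. This assumes dark text on a light, 8-bit grayscale background. Under that assumption, thinning the text strokes means each pixel takes the brightest value in its neighbourhood. Note that this is a morphological dilation of pixel intensities, so library routines such as OpenCV's `erode` would thicken the text instead. The function name and the 3×3 default window are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def erode_text(img, k=3):
    """Thin dark text strokes on a light background (uint8 grayscale, odd k).

    Each pixel takes the brightest value in its k x k neighbourhood, so dark
    strokes lose pixels along their edges and thin lines can break apart.
    """
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")         # replicate borders
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return windows.max(axis=(-2, -1)).astype(img.dtype)
```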

4.3.3 Dilation

As with erosion, scanned documents often contain dilated text resulting from the scanning process. Dilation occurs when an element expands, which can cause neighbouring objects to merge. Training on dilated versions of pages can help models perform well on such cases.
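Dilated text can be simulated with a sliding-window minimum, the mirror image of the erosion sketch above. This again assumes dark text on a light, 8-bit grayscale background. Each pixel takes the darkest value in its neighbourhood, so text strokes grow. The function name and window size are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dilate_text(img, k=3):
    """Thicken dark text strokes on a light background (uint8 grayscale, odd k).

    Each pixel takes the darkest value in its k x k neighbourhood, so strokes
    grow outward and neighbouring characters can merge.
    """
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")         # replicate borders
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return windows.min(axis=(-2, -1)).astype(img.dtype)
```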

4.3.4 Borders

Many documents, when scanned, contain borders caused by the edges of the binding. Training on border-augmented images can help object detection models cope with such noise.
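The sketch below paints a dark band along one page edge. It keeps the image size unchanged, as the augmentation setting requires. The band width, its color, and the function name are illustrative assumptions, and real binding borders are usually less uniform than this.

```python
import numpy as np

def add_binding_border(img, width=20, side="left", value=0):
    """Paint a dark band along one page edge, imitating a scanned binding edge.

    The image size is unchanged, so existing bounding boxes remain valid.
    """
    out = img.copy()
    if width <= 0:
        return out
    if side == "left":
        out[:, :width] = value
    elif side == "right":
        out[:, -width:] = value
    elif side == "top":
        out[:width, :] = value
    elif side == "bottom":
        out[-width:, :] = value
    else:
        raise ValueError(f"unknown side: {side}")
    return out
```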

4.3.5 Downscale

Downscaling reduces the number of pixels in an image, thus reducing the sharpness of each object in the image.
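A simple way to downscale while keeping the page size fixed is sketched below. The image is subsampled and then upsampled back to its original dimensions with nearest-neighbour repetition, so detail is lost but the bounding boxes still line up. This subsample-and-repeat approach and the function name are assumptions. Library resizers with smoother interpolation would also work.

```python
import numpy as np

def downscale(img, factor=2):
    """Drop resolution by keeping every `factor`-th pixel, then restore the original size.

    Nearest-neighbour upsampling returns the original height and width, so
    bounding boxes drawn on the original page still line up.
    """
    small = img[::factor, ::factor]
    up = np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)
    return up[: img.shape[0], : img.shape[1]]
```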

4.3.6 Blur

Documents vary widely in resolution. Training on blurred images can make models more robust to this variation.
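The text does not say which blur is used. The sketch below uses a simple box (mean) blur as a stand-in. A Gaussian blur is another common choice. It assumes 8-bit grayscale input, an odd window size, and a hypothetical function name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_blur(img, k=3):
    """Replace each pixel by the mean of its k x k neighbourhood (odd k, edges replicated)."""
    pad = k // 2
    padded = np.pad(img.astype(np.float32), pad, mode="edge")
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return np.rint(windows.mean(axis=(-2, -1))).clip(0, 255).astype(np.uint8)
```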

4.3.7 Salt and Pepper Noise

Noisy patches such as those resembling small dots of white/black colors like salt/pepper sprinkles are common in the case of scanned documents. This augmentation can be helpful to deal with such samples.
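Salt-and-pepper noise can be sketched as follows. A random fraction of pixels is set to pure black (pepper) and another equal fraction to pure white (salt). The `amount` parameter, the even split between salt and pepper, and the function name are illustrative assumptions.

```python
import numpy as np

def salt_and_pepper(img, amount=0.01, seed=None):
    """Set about amount/2 of the pixels to black (pepper) and amount/2 to white (salt)."""
    rng = np.random.default_rng(seed)
    out = img.copy()
    u = rng.random(img.shape)          # one uniform draw in [0, 1) per pixel
    out[u < amount / 2] = 0            # pepper
    out[u > 1 - amount / 2] = 255      # salt
    return out
```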

4.3.8 Random Lines

Another type of noise that is common in scanned documents is jagged lines, which are a result of the scanning process. To allow layout analysis on such documents, we include this augmentation.
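One way to imitate jagged scanner lines is to draw roughly horizontal dark lines whose row drifts by a small random walk as they cross the page. This is an illustrative assumption about how the augmentation could be realized, not the thesis's implementation. The function name and parameters are hypothetical.

```python
import numpy as np

def random_lines(img, n_lines=3, max_jitter=1, value=0, seed=None):
    """Draw n roughly horizontal, jagged dark lines across a grayscale page image."""
    rng = np.random.default_rng(seed)
    out = img.copy()
    h, w = img.shape[:2]
    for _ in range(n_lines):
        y = int(rng.integers(0, h))  # random starting row
        for x in range(w):
            out[y, x] = value
            # Let the row drift up or down by at most max_jitter pixels per column.
            y = int(np.clip(y + rng.integers(-max_jitter, max_jitter + 1), 0, h - 1))
    return out
```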

4.4 Results

In this section, we discuss the experimental results obtained in our evaluation. We focus our evaluation on two aspects, as discussed below. For each setting below, we use the

ETD-OD dataset introduced in Chapter 3 as the original dataset. For each image in the training set, we generate 2 augmented versions, applying up to 3 augmentation functions per augmented image. The number and type of augmentation functions are sampled independently for each generated image.
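The dataset expansion described above can be sketched as follows: 2 augmented copies per training image, each built from between 1 and 3 distinct, independently sampled transforms. The original pairs are kept, so the training set becomes three times its original size. The function name, the seed, and uniform sampling of the step count are assumptions. In the usage line, the "images" are integers for readability.

```python
import random

def build_augmented_dataset(dataset, transforms, copies=2, max_steps=3, seed=0):
    """Return the original (image, annotations) pairs plus `copies` augmented versions of each."""
    rng = random.Random(seed)
    dataset = list(dataset)
    result = list(dataset)                   # keep every original training pair
    for image, annotations in dataset:
        for _ in range(copies):
            m = rng.randint(1, min(max_steps, len(transforms)))  # number of steps
            out = image
            for f in rng.sample(list(transforms), m):            # distinct transforms
                out = f(out)
            result.append((out, annotations))                    # boxes reused unchanged
    return result

data = [(i, [f"b{i}"]) for i in range(5)]
aug = build_augmented_dataset(data, [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x])
print(len(aug))  # 15
```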

4.4.1 Models

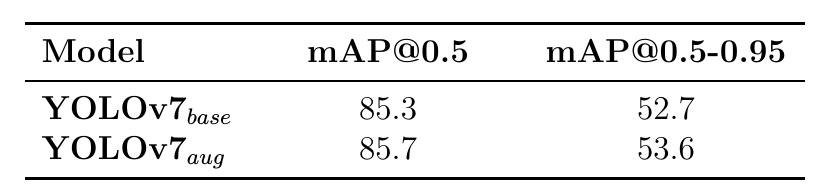

We use the following models for our experimental evaluation. Each setting uses YOLOv7 [40] as the object detection model, since it was the best performing object detection model on ETD-OD, as discussed in Chapter 3.
• YOLOv7_base: This is the version of YOLOv7 trained on the original object detection dataset. It serves as the baseline model, trained without any augmented data.
• YOLOv7_aug: This is the version of YOLOv7 trained on the original object detection dataset, along with the derived data consisting of 2 augmented versions per image. Because the augmented data is included in training, the training dataset is 3× the size of the dataset used for YOLOv7_base. This is the model being evaluated for augmentation-based training.

4.4.2 Layout Detection of Digital ETDs

In this experiment, we want to determine whether co-training on the original images together with augmented images derived from digital ETDs improves layout analysis on digital ETDs. Hence, we use the test split of ETD-OD as the evaluation dataset. We evaluate the performance of each of the two models, i.e., YOLOv7_base and YOLOv7_aug. The results are shown in Table 4.1.

4.4.3 Layout Detection of Scanned ETDs

In this experiment, we evaluate if the augmentation-based training can be helpful in the layout analysis of scanned ETDs. Hence, we use the test split of the scanned images from ETD-ODv2 (introduced in Chapter 5) as the evaluation dataset. The result for each of the two models is shown in Table 4.2.

4.4.4 Analysis

Based on the results shown in Tables 4.1 and 4.2, it can be observed that YOLOv7_aug, i.e., the model trained on the augmented dataset alongside the original dataset, outperforms the baseline model in both settings. The improvement on digital ETDs is marginal. This can be attributed to the fact that the evaluation set consists only of clean page images with limited distortions, so the model's improved robustness is not really tested in this setting. However, there is a significant improvement when testing on page images from scanned documents. Scanned documents are more likely to contain distortions, so good predictive performance on them requires a model that is robust to such distortions. The model trained on augmented images is more likely to have this robustness, as shown by the better performance of YOLOv7_aug over the baseline model.

Chapter 5 AI-Aided Annotation for Developing Layout Analysis Datasets

5.1 Chapter Overview

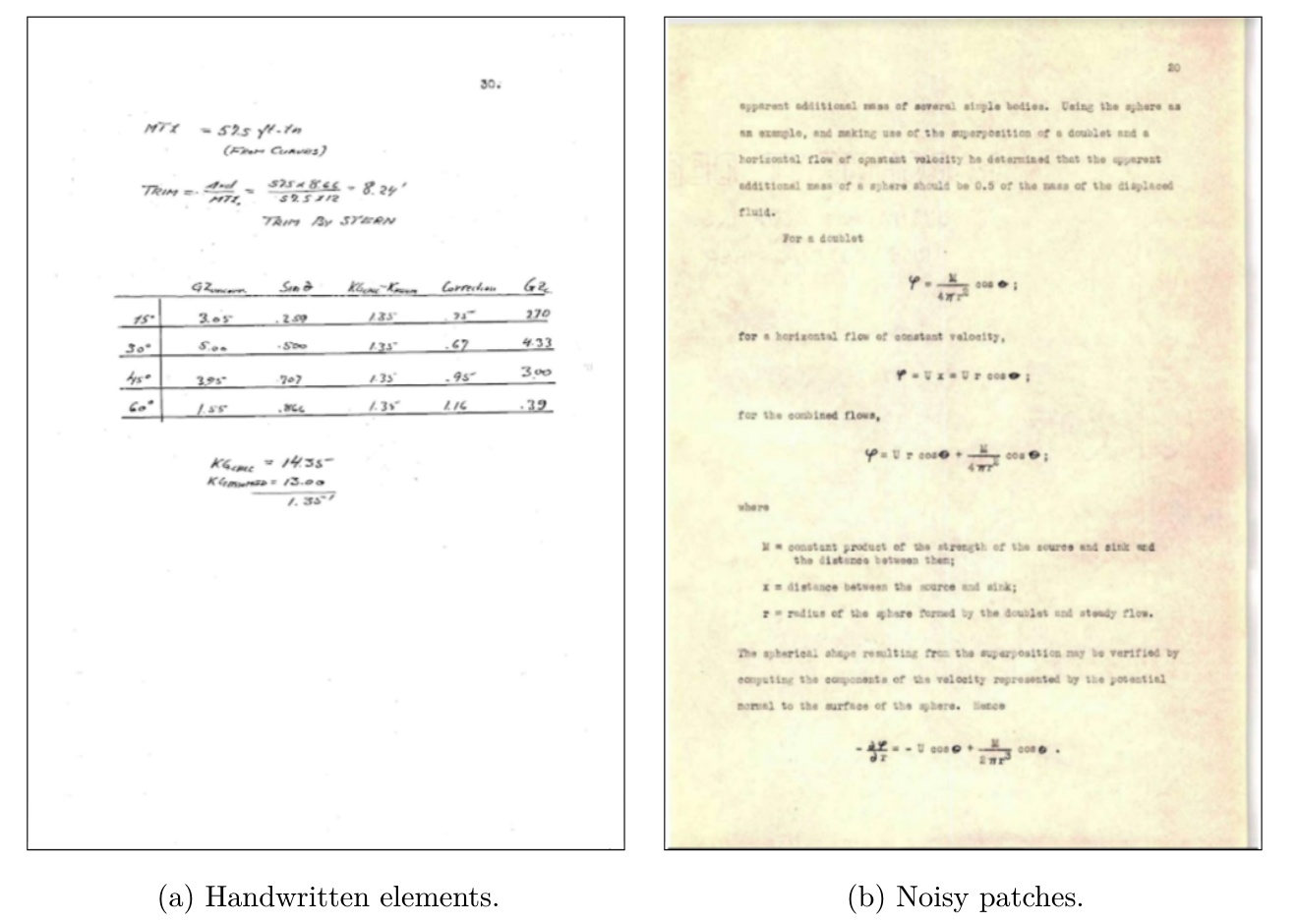

An important aspect of object detection-based methods is that they often require a huge amount of labeled training data. For digital documents, especially those written in LaTeX, it is often possible to obtain annotations using rule-based automatic annotation methods [24]. However, for scanned documents, and for digital documents without accompanying LaTeX source code, annotating data is a cumbersome process that requires a great amount of manual effort. In the case of ETDs, many documents in digital libraries, especially the older ones, are scanned documents that were written using legacy text editing software or with a typewriter. These documents were then microfilmed and/or scanned and converted to PDF. Consequently, they contain a large amount of noise introduced during the PDF conversion process, as shown in Figure 5.1. Furthermore, because these documents were prepared using legacy methods, they differ significantly in layout and structure from newer documents such as digital ETDs. Additionally, some elements, such as metadata elements like the ETD title and author name, can only be found on a few pages, while others, such as paragraphs, can be found on many pages of a document. As a result, the different object categories are unevenly distributed in the training data. This imbalance also lowers the performance of object detection models on classes with a limited number of training instances.

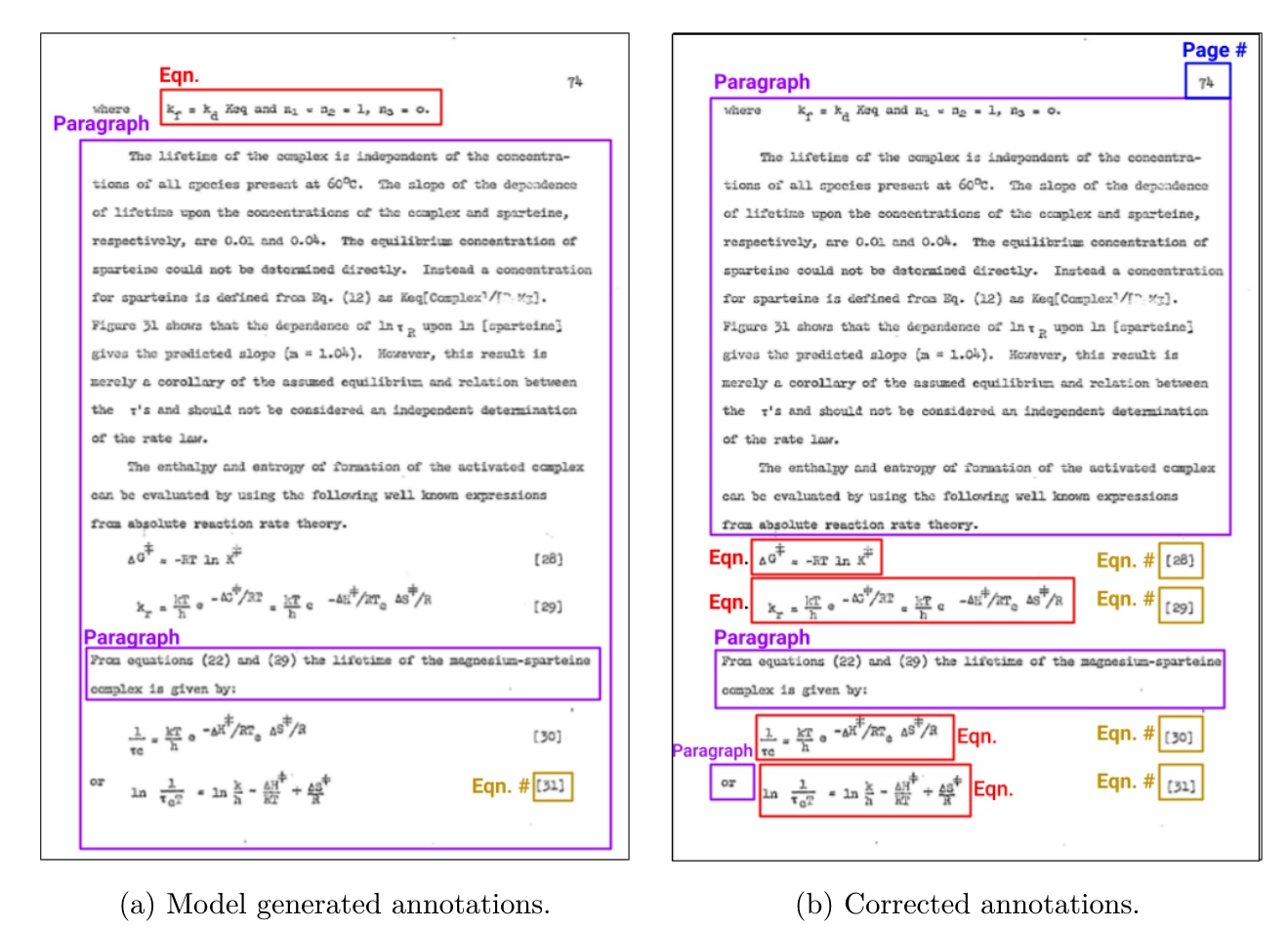

In this chapter, we propose an AI-aided annotation framework to minimize the resources, such as annotation time, needed to develop training datasets for layout analysis. Our proposed framework uses the predictive capabilities of models trained on existing datasets to assist human annotators. As illustrated in Figure 5.2, not all of the annotations generated by the model are correct, but many of them are. If human annotators only need to enter corrections, fewer instances have to be labeled manually. This significantly speeds up the annotation process without compromising the quality of the generated dataset. The framework also helps to address the problem of class imbalance in object detection datasets, by guiding annotators to selectively label images, e.g., those that are more likely to contain elements from a predefined set. Experimental results show that our proposed annotation scheme significantly reduces both annotation time and class imbalance, resulting in models with improved performance across the set of object classes. We also introduce ETD-ODv2, a new dataset for object detection-based layout analysis of long documents such as theses and dissertations. ETD-ODv2 supplements the page images included in ETD-OD, adding 20K page images originating from scanned theses and dissertations. It also adds annotations for page images that are likely to contain low-frequency elements, such as document title and algorithm. Such elements can only be found on selected pages of a document, or in documents from specific domains (e.g., equations in a physics work). These pages were sourced from a large corpus of both scanned and digital documents, which also makes them helpful for mitigating the class imbalance in existing datasets. ETD-ODv2 thus addresses the limitations of existing datasets for ETD layout analysis, which cover only digital documents and suffer from a class imbalance problem. Our experimental results show that models trained on our newly annotated dataset perform much better than those trained on other datasets.

5.2 Proposed AI-aided Annotation Scheme

Due to the resource-intensive nature of the dataset annotation process, labeled data for training supervised machine learning models are always scarce. However, unlabeled data are generally available in abundance. This is also the case with document layout analysis, where getting high-quality annotations for documents and their respective pages is not easy. How- ever, given the numerous documents that exist on the Internet and in digital libraries, many

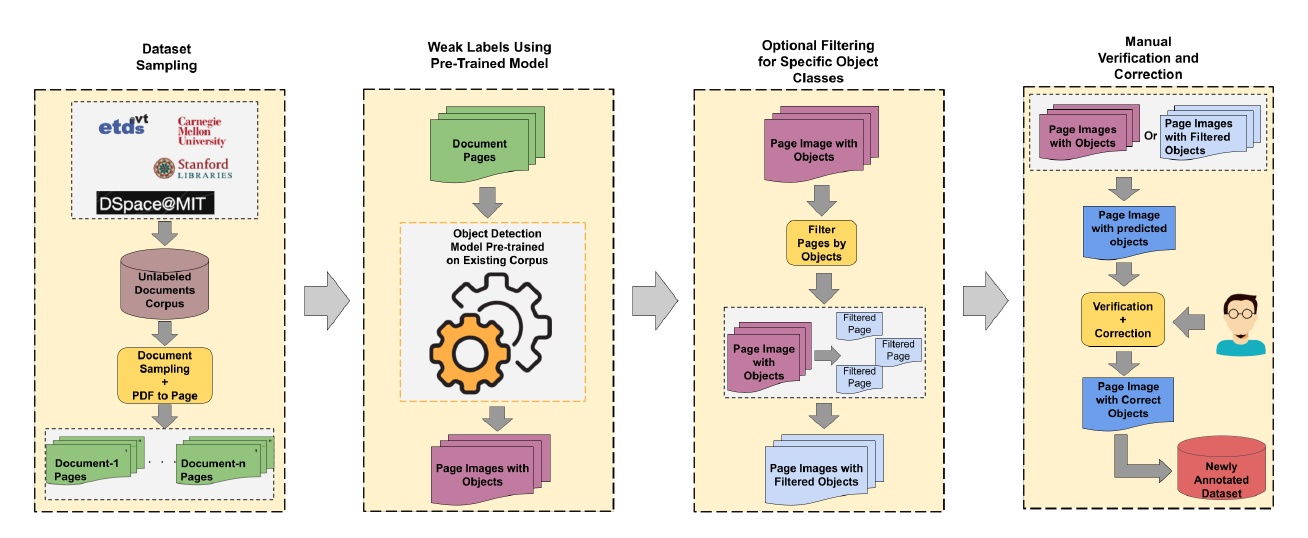

unlabeled scholarly documents are publicly available. Although labeling document page im- ages is a cumbersome task, we hypothesize that models trained on existing datasets can be used to assist human annotators in the labeling process, thus reducing the time required to annotate training datasets. These models can be used to generate weak labels for the huge corpus of unlabeled ETDs, which can then be filtered, validated, and corrected by human annotators. Based on this assumption, in this section, we propose an AI-aided annotation framework for developing datasets to train supervised object detection models. Figure 5.3 gives an overview of our proposed framework. The key components of this framework are discussed in detail below.

5.2.1 Dataset Sampling

We use a large corpus of unlabeled ETDs, sourced from multiple open access digital libraries. We first sample a set of documents from this unlabeled corpus that can be used for AI-aided annotation. Each of these documents is then split into page images, since object detection models require images as input.

5.2.2 Weak Labels Using Pre-Trained Model

Once we have a set of documents as well as their respective page images, they are sent to an object detection model such as YOLO [40] or Faster-RCNN [32] that has been pre-trained on an existing labeled dataset, such as ETD-OD [1]. The labels thus inferred for each image serve as weak annotations for further processing and manual verification/annotation.

5.2.3 Optional Filtering for Specific Object Classes

In some cases, such as with academic documents like theses and dissertations, labeling every page of the sampled documents could result in a highly imbalanced dataset. In such cases, it may be desirable to use the weak labels to identify images containing a predefined set of object categories. We refer to these object categories as objects of interest. They include minority classes, i.e., those with very few instances in the labeled dataset, and classes with lower performance than the other categories. This filtering can enable researchers to produce datasets with more balanced class distributions.
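The filtering step can be sketched as a selection over the weak labels. A page is kept if the pre-trained model predicts at least one object of interest on it. The label strings below are hypothetical encodings of the minority elements listed in Section 5.3.2. The `min_score` cutoff is also an assumption: keeping it low favors recall, which is acceptable here because a human reviews every selected page anyway.

```python
# Hypothetical label strings for the minority elements listed in Section 5.3.2.
OBJECTS_OF_INTEREST = {
    "title", "author", "date", "university", "committee", "degree",
    "abstract_text", "list_of_contents_heading",
    "equation", "equation_number", "algorithm", "reference_heading",
}

def select_pages(weak_labels, objects_of_interest, min_score=0.0):
    """Return IDs of pages whose weak labels contain at least one object of interest.

    weak_labels maps page_id -> list of (label, score) predicted by the pre-trained model.
    """
    return [
        page_id
        for page_id, detections in weak_labels.items()
        if any(label in objects_of_interest and score >= min_score
               for label, score in detections)
    ]
```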

5.2.4 Manual Verification and Correction

The filtered set of pages, along with their predicted bounding boxes and their respective labels, is then verified by human annotators for correctness. For page images with correctly predicted objects, no changes are made and the respective page is added to the verified dataset. For page images with incorrect predictions, whether in terms of missing or incorrect labels, the correct bounding boxes are drawn by human annotators before being added to the verified dataset. The new dataset can then be used to fine-tune existing pre-trained models or in combination with existing datasets for model training.
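The verification step can be sketched as a loop over the filtered pages. In this sketch, a `review` callback stands in for the human annotator: it receives the model's proposed boxes and returns the corrected list, or the same list if every proposal is correct. The function names and the data layout are hypothetical. The sketch also counts how many pages needed any change.

```python
def verify_pages(page_ids, predictions, review):
    """Human-in-the-loop verification of model-proposed boxes.

    predictions maps page_id -> list of proposed (label, box) pairs.
    review(page_id, proposed) returns the corrected list of (label, box) pairs.
    Returns the verified annotations and the number of pages that needed changes.
    """
    verified, n_corrected = {}, 0
    for page_id in page_ids:
        proposed = predictions[page_id]
        final = review(page_id, list(proposed))  # the annotator edits a copy
        if final != proposed:
            n_corrected += 1
        verified[page_id] = final               # correct pages are kept as predicted
    return verified, n_corrected
```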

5.3 ETD-ODv2 Dataset

In this section, we introduce ETD-ODv2, a new dataset for layout analysis of electronic theses and dissertations. Although existing datasets like ETD-OD [1] can be helpful in

layout extraction from digital documents, they suffer from a class imbalance problem and do not contain scanned documents.

5.3.1 Scanned Documents

Scanned documents have several attributes that are not found in digital documents. These include the following.
• Noisy patches: Many pages of scanned documents contain noisy patches that result from the process of converting such documents into an electronically readable PDF file.
• Low resolution: Because these documents are essentially images of hard-copy versions of the original document, they tend to have relatively low resolution.
• Dilated or eroded text: In many scanned documents, the text is eroded (i.e., has a thinner font than the original document) or dilated. This can also be attributed to the PDF conversion process.
• Handwritten elements: Some pages of scanned documents contain elements (such as tables, figures, and equations) that were written or drawn by hand rather than typed or created using software.

Because of these attributes, object detection models trained on datasets of digital documents generally do not perform well on scanned documents. Hence, our new dataset includes manually annotated page images from scanned documents, to support layout analysis of scanned documents.

5.3.2 Page Images with Minority Elements

Including pages from scanned documents is desirable, but it does not protect the dataset from class imbalance. Some elements, such as document title and author name, typically appear only on a small set of pages in a document, such as the front page. Therefore, a dataset constructed by labeling every page of a document will always be prone to class imbalance. Moreover, some element classes, such as algorithm, may appear only in documents from certain domains, such as computer science. Hence, a set of documents uniformly sampled from several different domains will have few pages containing such instances. To alleviate this problem, we use the proposed AI-aided annotation method to identify/filter and annotate pages that are more likely to contain such minority elements. These page images were sourced from both digital and scanned documents. The elements that we consider to be minority elements are listed below.

• Elements found on a limited number of pages: Title, Author, Date, University, Committee, Degree, Abstract Text, List of Contents Heading.
• Elements found in documents from select disciplines: Equation, Equation Number, Algorithm, Reference Heading.

5.3.3 Dataset Source and Object Classes

To ensure compatibility with existing datasets, we use the object categories defined in ETD-OD for annotation. The documents in both subsets of our dataset (i.e., the scanned and AI-aided subsets) were sourced from a uniformly sampled set of theses and dissertations from open access institutional repositories of U.S. origin [39].

5.3.4 Dataset Statistics

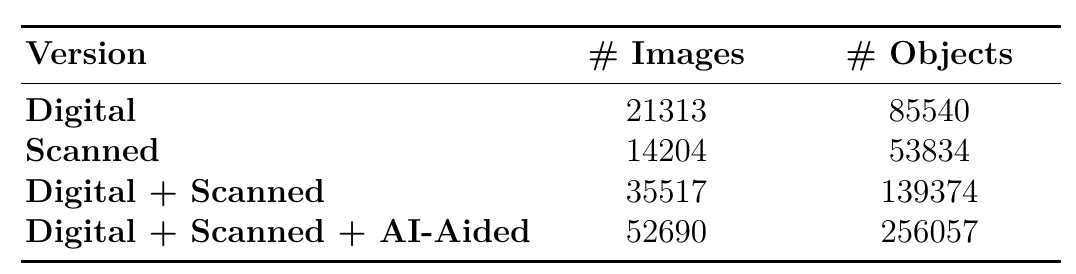

Table 5.1 shows the detailed statistics of different object categories in our dataset.

Scanned Documents

The subset of scanned documents in our dataset consists of images and bounding box annotations of ∼16K pages, derived from 100 theses and dissertations. These documents were annotated by a group of five undergraduate students [49]. To ensure correctness, each sample also went through another round of review by one of the authors of [2]. We use Roboflow as the dataset annotation platform.

Pages with low-frequency elements

Our dataset also includes ∼20K page images from ∼1,200 documents that were annotated using our proposed AI-aided annotation framework. The pages were filtered based on the labels listed above, and then reviewed and corrected as needed by a group of four annotators [11].

5.4 Experiments

In this section, we report the experimental results obtained during our evaluation. Our experiments focus on measuring the savings in human effort, such as annotation time, achieved with the AI-aided annotation strategy. We also analyze whether the new dataset, consisting of scanned documents and of pages with instances from lower-frequency categories, helps improve the performance of object detection models.

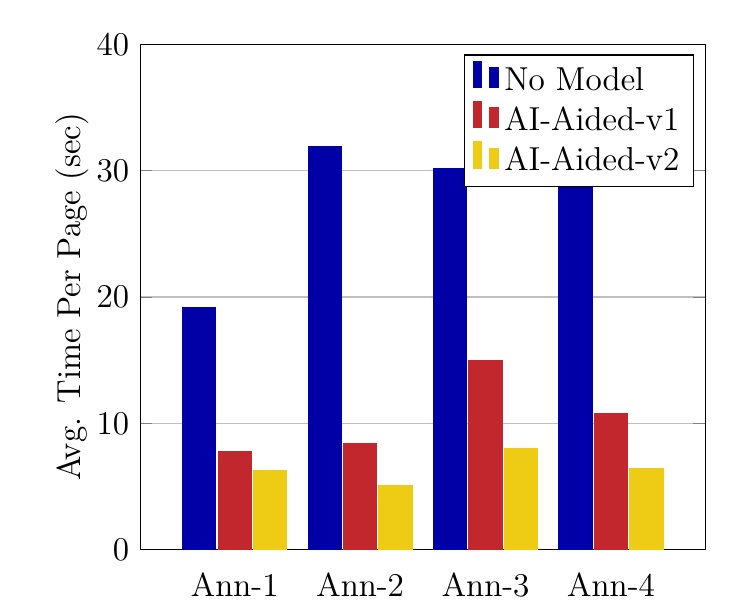

5.4.1 Annotation Time

Experimental Setup

To build our proposed AI-aided annotation framework, we used the bounding box widget from the open-source framework pylabel, integrated with a pre-trained object detection model. We trained a YOLOv7 model [40] on ETD-OD [1] and a small set of ∼2K scanned documents. We used only a small number of samples from the scanned documents dataset, as that was all that was available at the time. The resulting model was then used in our AI-aided framework to generate the proposed labels. We will refer to this model as YOLOv7_base in the remainder of the discussion. As noted in [1], YOLOv7 outperforms other models in the object detection task, so we use it as the detection model for empirical evaluation.

Evaluation Settings

To determine whether the proposed AI-aided annotation scheme reduces resource requirements, we compare the time required to label images under different settings.
• No Model Assistance: This is the classical labeling setting, under which the annotators are shown neither bounding boxes nor labels for the page images.
• AI-Aided-v1: Under this setting, for each image, the annotators were shown the bounding boxes generated by the YOLOv7_base model.
• AI-Aided-v2: For this setting, we fine-tuned the YOLOv7_base model on a set of 10K page images labeled using our AI-aided annotation scheme. This was done to evaluate whether assistance from a model trained on additional new data affects the annotation time. We then used this model to generate bounding boxes for each image shown to the annotators.

In the two AI-aided settings, annotators were first asked to review the model-generated annotations. All correct annotations were left unchanged, and annotators were asked to fix only missing, incorrect, or extra bounding boxes. For each of the three settings, each of the four annotators annotated ∼500 pages, and the time spent on annotation was recorded.

Results

In Figure 5.4, we report the average time spent per page by each of the annotators under different annotation settings. The following observations can be made:

• Model assistance significantly reduces annotation time: As the graph shows, annotating a page without model assistance (i.e., without any proposed bounding boxes) takes 2-3 times longer on average than in either of the AI-aided settings. This is likely because the assisting models retain enough predictive power to help with many of the elements found on pages, such as paragraphs and figures, even though they may have been trained on limited data with limited coverage of document types and object classes. Thus, we conclude that assistance from models trained on existing data significantly reduces annotation time, which in turn allows more data to be annotated.
• Model assistance improves with better trained models: Figure 5.4 also shows that as models gain predictive power, their suggested labels become more accurate, further reducing the time required to annotate a page. The model used in the AI-Aided-v2 setting had been trained on 10K more samples than the one used in the AI-Aided-v1 setting, and those samples were also more balanced in terms of object classes. It therefore has better predictive power, which makes it more helpful to human annotators.

5.4.2 Object Detection Performance

In this analysis, we present our findings on how the AI-aided annotated dataset helps improve object detection performance. The specific details of this analysis are described below.

Object Detection Model

As stated above, we use YOLOv7 as the benchmark object detection model for this analysis. The purpose of this analysis is to determine how training on different datasets affects model performance, so the specific choice of object detection model is beyond its scope. Moreover, previous studies have shown that YOLOv7 is a state-of-the-art model for object detection tasks [1, 40, 42].

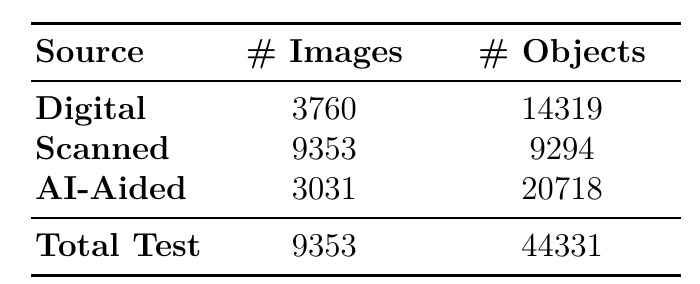

Test Dataset

Since the AI-aided subset of our dataset was constructed with the objective of mitigating the class imbalance problem, it consists of page images from documents of several types, such as scanned and digital. Therefore, to analyze how training with the AI-aided dataset helps object detection models on various types of documents, we construct a test dataset consisting of page images sampled from ETD-OD [1], as well as the scanned and low-frequency element pages from ETD-ODv2. This ensures that the test set is diverse in terms of both document types and object types. The breakdown of images and objects in the test dataset is shown in Table 5.2.

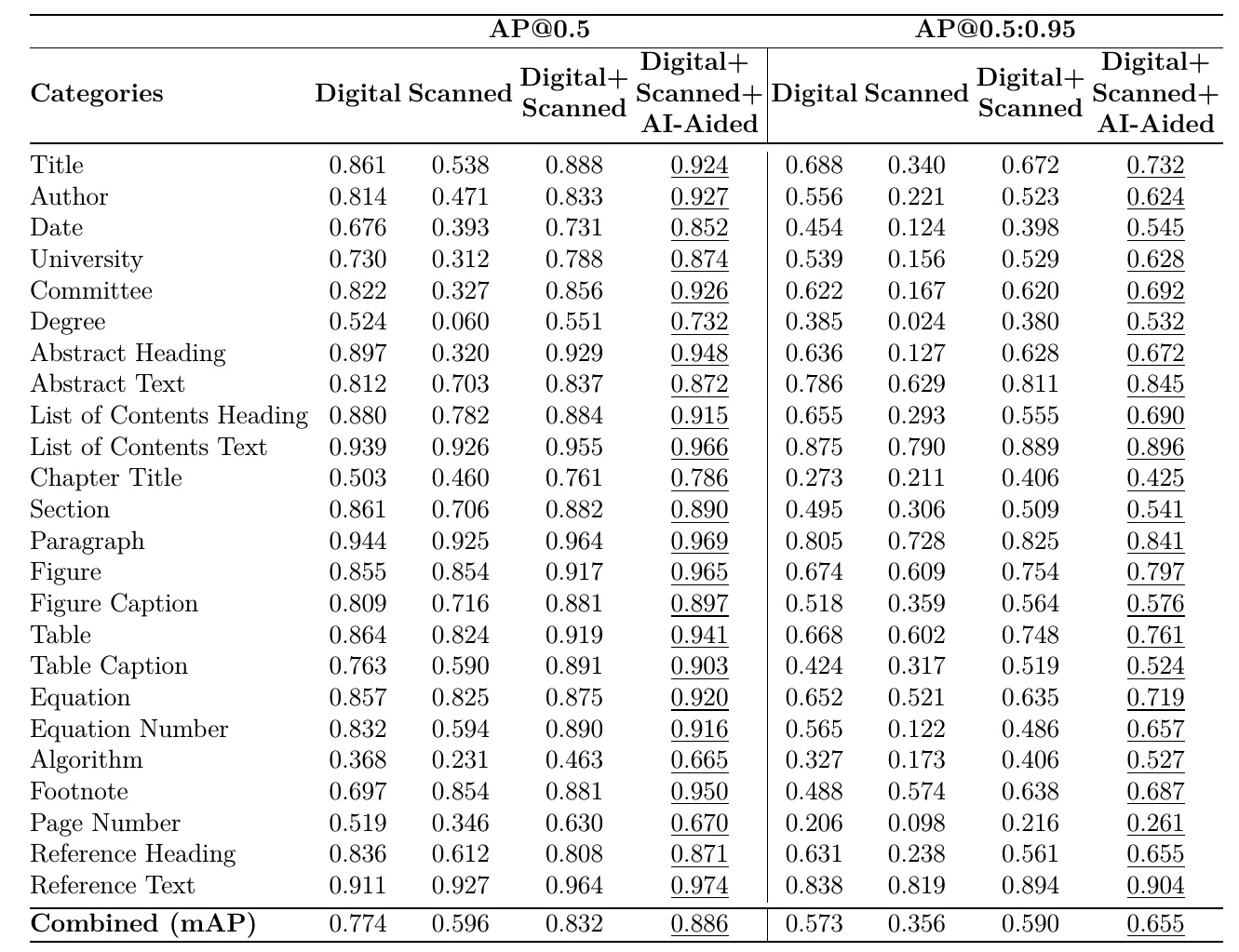

Baselines

We use the versions of the dataset listed below to evaluate object detection performance. All versions used YOLOv7 as the object detection model. The number of images and objects in each version is listed in Table 5.3.
• Digital: This version of the model was trained only on the digital document images from ETD-OD. As such, the training dataset contained a small number of samples from the minority classes due to the class imbalance in that dataset.
• Scanned: This version of the model was trained only on the scanned subset of the ETD-ODv2 dataset. As in the previous setting, the training dataset used in this setting also has the class imbalance problem.
• Digital + Scanned: Under this setting, the YOLOv7 model was trained on the combined images of scanned and digital documents, that is, a merged set consisting of the two dataset splits described above.
• Digital + Scanned + AI-Aided: This setting uses the Digital + Scanned split described above, along with the AI-aided subset of ETD-ODv2. It represents a model that has been trained on diverse types of documents (i.e., digital and scanned) with a larger number of training instances from each object category.

Evaluation Metrics

We use two commonly used object detection metrics to evaluate the results of the different models discussed above. Both metrics are based on average precision (AP), which summarizes the precision-recall trade-off of a detector. A predicted bounding box is counted as correct only if its overlap with a ground-truth box of the same category, measured as intersection over union (IoU) of the two box areas, reaches a certain threshold. The two metrics are described in detail below.
• AP@0.50 / mAP@0.50: For a given object category, AP@0.50 is the AP computed when a predicted bounding box counts as correct if its IoU with a ground-truth bounding box is at least 0.50. mAP@0.50 is the average of AP@0.50 over all object categories.
• AP@0.50:0.95 / mAP@0.50:0.95: This is computed by first calculating the AP at different IoU thresholds, from 0.50 to 0.95 with a step of 0.05. All these AP values are averaged to compute AP@0.50:0.95 for an object category. mAP@0.50:0.95 is the average of AP@0.50:0.95 over all object categories.
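The definitions above can be made concrete with a short sketch. It assumes axis-aligned boxes in (x1, y1, x2, y2) form, greedy matching of predictions to unmatched ground-truth boxes in order of descending confidence, and all-point interpolation of the precision-recall curve. COCO-style evaluators commonly use 101-point interpolation, and the evaluation code used with YOLOv7 may differ in other details, so this sketch is not guaranteed to reproduce the numbers in Table 5.4. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1
Pred = Tuple[str, float, Box]             # (page_id, confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union (IoU) of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Pred], gts: Dict[str, List[Box]], thr: float) -> float:
    """AP for one category at one IoU threshold (all-point interpolation)."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {page: [False] * len(boxes) for page, boxes in gts.items()}
    hits: List[int] = []
    # Greedily match predictions, most confident first, to unmatched ground truths.
    for page, _conf, box in sorted(preds, key=lambda p: -p[1]):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(page, [])):
            overlap = iou(box, gt)
            if not matched[page][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched[page][best_j] = True
            hits.append(1)  # true positive
        else:
            hits.append(0)  # false positive
    # Precision and recall after each prediction in ranked order.
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / n_gt)
    # Make precision non-increasing, then sum the area under the curve.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_ap(preds_by_cat: Dict[str, List[Pred]],
            gts_by_cat: Dict[str, Dict[str, List[Box]]],
            thresholds: Sequence[float]) -> float:
    """Average AP over the given IoU thresholds, then over object categories."""
    per_cat = []
    for cat, gts in gts_by_cat.items():
        aps = [average_precision(preds_by_cat.get(cat, []), gts, t) for t in thresholds]
        per_cat.append(sum(aps) / len(aps))
    return sum(per_cat) / len(per_cat)


THRESHOLDS_50 = [0.50]                                              # mAP@0.50
THRESHOLDS_50_95 = [round(0.50 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95
```

For example, a predicted box covering exactly half of a ground-truth box has IoU 0.50, so it counts at the 0.50 threshold but fails the nine stricter thresholds, contributing only a tenth of its AP@0.50 to AP@0.50:0.95.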

Results

Table 5.4 shows the results obtained on the test dataset described above in each of the training settings. Based on the results shown, the following observations can be made.
• Performance w.r.t. document type: The subset of images used to train the Scanned model had the highest amount of noise and the lowest quality (e.g., blurred pages) compared to the training datasets used for the other models. This results in the lowest overall performance of the model.
• Size of the training dataset: The Scanned model was trained on the smallest training dataset. Consequently, it has the lowest performance among all four variants. The large size of the training dataset used in Digital + Scanned + AI-Aided helps it achieve the best overall performance.
• Performance on minority classes: We also find that training on a dataset with a better distribution in terms of object classes significantly improves performance. As can be seen from the results, the performance of certain categories, such as Degree and Algorithm, increased by ∼20%. This shows that model performance on certain low-performing categories can be improved by training on a larger number of samples from such categories.
• Weak labels can be helpful signals for targeted annotation: Another observation that can be made from the performance improvements achieved on low-frequency categories is that weak labels generated from an existing model can serve as a good indicator for more targeted annotation. Although using such labels cannot guarantee coverage, they can still address performance issues to a great extent.
• Overall performance: Finally, we can also observe that performance improvements are achieved in other categories that were not included in the filter set. This can be attributed to the fact that while the AI-Aided data consisted of pages filtered based on the occurrence of minority elements, these pages also contained other elements in addition to those from the filter set. This allowed the model to be trained on more samples from other object categories as well, thus improving the performance across all object classes.
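The targeted-annotation idea in the last two observations can be sketched as follows: run an existing model over unlabeled pages, keep the pages whose weak labels include a class from the filter set, and note that those pages also bring along objects of other classes. The share cutoff used to pick minority classes, the default confidence threshold of 0.25, and all function names are illustrative assumptions; the exact filtering procedure used to build the AI-aided subset is not specified here.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

WeakLabel = Tuple[str, float]  # (predicted class, confidence) from an existing model


def minority_classes(train_labels: List[str], max_share: float) -> Set[str]:
    """Classes whose share of the labeled training objects is at most max_share."""
    counts = Counter(train_labels)
    total = sum(counts.values())
    return {cls for cls, n in counts.items() if n / total <= max_share}


def select_pages(weak_labels: Dict[str, List[WeakLabel]],
                 filter_set: Set[str],
                 min_conf: float = 0.25) -> List[str]:
    """Pages whose weak labels contain at least one confident filter-set class."""
    return [page for page, preds in weak_labels.items()
            if any(cls in filter_set and conf >= min_conf for cls, conf in preds)]


def extra_objects(weak_labels: Dict[str, List[WeakLabel]],
                  pages: List[str], filter_set: Set[str]) -> Counter:
    """Counts of non-filter-set classes that come along with the selected pages."""
    return Counter(cls for page in pages
                   for cls, _conf in weak_labels[page] if cls not in filter_set)
```

Selected pages would then be sent to annotators with the model's boxes pre-filled; extra_objects gives a rough view of how many objects from other categories the targeted batch also contributes.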

Chapter 6 Structured Representations of Long Scholarly Documents

6.1 Chapter Overview

In Chapters 3, 4, and 5, we discussed how object detection can be used to detect and extract important scholarly elements from long documents such as ETDs. However, the scope of these chapters was limited to extracting elements from individual document pages. In reality, a long PDF document such as an ETD consists of many pages, each of which contributes to the overall organization of the document, which can be represented as a hierarchical structure. Converting the “unordered set” of objects extracted by layout parsing methods to a structured format that represents the organization of information in an ETD can be very helpful to support downstream tasks such as document/figure search, chapter summarization, etc. The structured versions can also support the accessibility needs of people with disabilities, by means of accessibility tools such as screen readers. However, generating structured versions of ETDs is a non-trivial task that involves several challenges, as discussed below:
• Identifying delimiters: Delimiters, such as chapter and section elements, are among the most important components of the information structure of an ETD. Because ETDs are inherently long, correct identification of delimiters is an important part of ETD parsing. Delimiters are useful for segmenting the document into multiple smaller components, making it easier to read. They are also useful in downstream tasks that rely on segmented units of a long document, such as chapter summarization.
• Linking objects: Many object types are related to each other, and correct identification of such relationships can be useful in several downstream tasks. For example, linking figures and/or tables to their respective captions can be useful in figure/table search. As such, identifying these relationships is important during information extraction from scholarly documents.

In this chapter, we address the task of converting the extracted set of elements to a structured format, such as XML, so that the information in a document can be made useful for other downstream tasks. We also present a system that allows for easy navigation of a long PDF document, using the information from the generated XML format.

6.2 XML Schema

Based on the structure of an ETD, in Schema 6.1, we present an XML schema that can be used to capture the organization of content in an ETD in a structured format. The schema is based on the following observation: the overall information in an ETD can broadly be encapsulated into three high-level categories.
• front consists of elements that give key identifying information, as well as an overall summary of the work. These include metadata elements, the abstract, and list(s) of contents, figures, and tables.
• body consists of elements that give in-depth information about the content of a document. It contains a list of chapters, each of which further contains a list of sections. The sections encapsulate the detailed informational elements contained therein.
• back consists of information that often is not critical for understanding the document. This includes a list of references and the appendices.
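To illustrate how this three-part structure can be materialized, the following sketch builds a skeleton document with Python's standard xml.etree.ElementTree module. Only front, body, back, chapter, and section come from the description above; the root element etd and the tags title, abstract, paragraph, references, and reference are hypothetical placeholders and may not match the element names used in Schema 6.1.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def build_etd_xml(title: str, abstract: str,
                  chapters: List[Dict], references: List[str]) -> ET.Element:
    """Build a front/body/back skeleton; most tag names here are hypothetical."""
    etd = ET.Element("etd")

    # front: identifying information and a summary of the work
    front = ET.SubElement(etd, "front")
    ET.SubElement(front, "title").text = title
    ET.SubElement(front, "abstract").text = abstract

    # body: chapters, each holding sections with their detailed elements
    body = ET.SubElement(etd, "body")
    for ch in chapters:
        chapter = ET.SubElement(body, "chapter", title=ch["title"])
        for sec in ch.get("sections", []):
            section = ET.SubElement(chapter, "section", title=sec["title"])
            for text in sec.get("paragraphs", []):
                ET.SubElement(section, "paragraph").text = text

    # back: references (appendices would also go here)
    back = ET.SubElement(etd, "back")
    refs = ET.SubElement(back, "references")
    for ref in references:
        ET.SubElement(refs, "reference").text = ref
    return etd


if __name__ == "__main__":
    doc = build_etd_xml(
        "Example title", "Example abstract.",
        [{"title": "Introduction",
          "sections": [{"title": "Motivation", "paragraphs": ["First paragraph."]}]}],
        ["Example reference."])
    print(ET.tostring(doc, encoding="unicode"))
```

Keeping chapter and section titles as attributes makes it straightforward to derive a navigable outline later, while the elements nested inside each section preserve reading order.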

6.3 XML Generation

As discussed earlier, two challenges hinder the process of converting a PDF and the objects detected on each of its pages into the XML format shown above. We address these challenges by examining the errors found in a uniformly sampled set of documents, and then formulating a set of rules based on domain expertise regarding document structure.

6.3.1 Identifying Delimiters

We discuss some of the commonly found errors in delimiters below. While the list is not exhaustive and might not cover all possible errors, it is based on a user study of a sample of 25 ETDs, conducted by 2 undergraduate students from Virginia Tech’s course CS 4624 (Multimedia, Hypertext, and Information Access) in Spring 2023.
• Error: The last line of a paragraph on a new page is detected as a chapter heading.
Reason: Many chapter headings in ETDs appear as the first line of a page and are only a few words (less than a line) long. The last line of a paragraph that continues onto a new page resembles such chapter headings.
Proposed Rules:
– Chapter headings that do not start with a capital letter are re-labeled as paragraph.
– The last paragraph of the previous page should end with an end punctuation mark; otherwise, the detected heading is likely the continuation of that paragraph.
• Error: Chapter titles in headers and footers are regarded as the start of new chapters.
Reason: Many documents contain headers and footers on every page, which contain the title of the current chapter. Due to similarities between such elements and the actual chapter title, such as the presence of the “chapter” keyword, the model might regard them as chapter titles.
Proposed Rules: When identifying a new instance of a chapter element in the parser, ensure that the title of the new chapter differs from that of the previous chapter.

Some other rules that are applied to chapter elements include:
• Presence in Table of Contents: For all the detected chapters, we check for their existence in the table of contents. A list of all the entries from the table of contents is extracted, and each detected chapter title is then checked against this set of entries. The matching is done using fuzzy string matching, to make sure that each chapter title matches at least one table of contents entry with a similarity above a certain threshold. This threshold will be derived empirically. Additionally, since we also detect the page number as one of the objects, we can match the page number in the table of contents entry against the page number detected on the page containing the chapter title, as a further validation step.
• Location in the page: Chapter titles often appear at the top of the page. Based on this observation, we can filter out all tentatively detected chapter titles that occur in the bottom half of the page, based on their y-coordinate.

While such rules may be helpful in fixing incorrect predictions, their scope is limited to false positive predictions only. This means that the rules cannot help us identify the objects that were not detected by the object detection method. Those objects can only be identified by a better object detection model, and we leave that as future work.
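The rules above can be combined into a single post-processing pass over the detected chapter titles. The sketch below is an illustration under several assumptions: candidates arrive in document order, table of contents entries have already been extracted and stripped of dot leaders and page numbers, fuzzy matching uses difflib.SequenceMatcher from the Python standard library, and the 0.8 similarity threshold is a placeholder for the empirically derived value. The class and function names are hypothetical, and the page-number cross-check is omitted.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional

END_PUNCTUATION = (".", "?", "!")  # assumed set of sentence-ending characters


@dataclass
class HeadingCandidate:
    text: str                                # text of a box labeled as a chapter title
    y_top: float                             # top edge of the box, measured from the page top
    page_height: float
    prev_page_last_paragraph: Optional[str]  # last paragraph on the previous page, if any


def _norm(s: str) -> str:
    return " ".join(s.lower().split())


def in_toc(title: str, toc_entries: List[str], threshold: float) -> bool:
    """Fuzzy match against table of contents entries (assumed cleaned of page numbers)."""
    return any(SequenceMatcher(None, _norm(title), _norm(e)).ratio() >= threshold
               for e in toc_entries)


def filter_chapter_headings(cands: List[HeadingCandidate], toc_entries: List[str],
                            threshold: float = 0.8) -> List[str]:
    """Keep candidates that pass all rule-based checks, in document order."""
    accepted: List[str] = []
    for c in cands:
        text = c.text.strip()
        if not text[:1].isupper():
            continue  # no leading capital letter: re-label as paragraph
        prev = (c.prev_page_last_paragraph or "").rstrip()
        if prev and not prev.endswith(END_PUNCTUATION):
            continue  # previous page ends mid-sentence: likely a continuation
        if accepted and _norm(text) == _norm(accepted[-1]):
            continue  # same title as the previous chapter: running header/footer
        if c.y_top > c.page_height / 2:
            continue  # chapter titles are expected in the top half of the page
        if not in_toc(text, toc_entries, threshold):
            continue  # must fuzzily match some table of contents entry
        accepted.append(text)
    return accepted
```

As the text notes, every check here can only reject candidates, so the pass reduces false positives but cannot recover chapter titles that the detector missed.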

6.3.2 Linking Figures and Tables with their Captions

For each of the element types (e.g., figures and tables) that need to be linked with their captions, we first identify the relative order of the elements and their captions. Some documents place captions below figures, while others place them above. The same also applies to tables. Hence, for each document, we iterate through all the detected figures and count the number of figures that have a caption above them and the number of figures that have a caption below them. Whichever count is larger determines the order of figures and their captions for that document. The same process is followed for tables to determine the table-caption order. Next, for each figure and table, based on the determined order, we find the nearest corresponding caption element. A special case is figures whose captions appear on a different page. A methodology for linking such figures with their captions is a direction for future work in this domain.
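A minimal sketch of this two-step procedure follows. It assumes each detected region is reduced to a page index and a vertical extent, decides whether an element "has a caption above or below it" by looking at its nearest caption on the same page, and breaks ties in favor of captions below; these choices and all names are assumptions. The same functions would be run once for figures and once for tables, and elements whose captions appear on another page are left unlinked, matching the limitation noted above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Region:
    page: int
    y_top: float     # measured from the top of the page
    y_bottom: float


def _gap(element: Region, caption: Region) -> float:
    """Vertical distance between an element and a caption on the same page."""
    return min(abs(caption.y_top - element.y_bottom), abs(element.y_top - caption.y_bottom))


def caption_order(elements: List[Region], captions: List[Region]) -> str:
    """Majority vote: is each element's nearest same-page caption below or above it?"""
    below = above = 0
    for e in elements:
        same_page = [c for c in captions if c.page == e.page]
        if not same_page:
            continue  # caption may be on another page; not counted
        nearest = min(same_page, key=lambda c: _gap(e, c))
        if nearest.y_top >= e.y_bottom:
            below += 1
        else:
            above += 1
    return "below" if below >= above else "above"  # ties default to "below" (assumption)


def link_captions(elements: List[Region], captions: List[Region]) -> Dict[int, Optional[int]]:
    """Map each element index to the nearest caption on the majority side, or None."""
    order = caption_order(elements, captions)
    links: Dict[int, Optional[int]] = {}
    for i, e in enumerate(elements):
        if order == "below":
            cands = [(c.y_top - e.y_bottom, j) for j, c in enumerate(captions)
                     if c.page == e.page and c.y_top >= e.y_bottom]
        else:
            cands = [(e.y_top - c.y_bottom, j) for j, c in enumerate(captions)
                     if c.page == e.page and c.y_bottom <= e.y_top]
        links[i] = min(cands)[1] if cands else None  # None: e.g., caption on another page
    return links
```

Deciding the order once per document, rather than per element, keeps a figure from being attached to the caption of the next figure when two figures are stacked closely on one page.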

6.3.3 Linking Equations and Equation Numbers

Equation elements are linked to the nearest equation number elements based on their y-coordinates.
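As a minimal sketch of this rule, each equation can be paired with the equation number on the same page whose vertical center is closest. Comparing vertical centers rather than top edges, and the optional max_dy cutoff for unnumbered equations, are assumptions not stated above; the names are hypothetical.

```python
from typing import List, Optional, Tuple

Span = Tuple[int, float, float]  # (page, y_top, y_bottom), measured from the top of the page


def link_equation_numbers(equations: List[Span], numbers: List[Span],
                          max_dy: Optional[float] = None) -> List[Optional[int]]:
    """For each equation, the index of the equation number with the closest vertical center."""
    links: List[Optional[int]] = []
    for page, top, bottom in equations:
        center = (top + bottom) / 2
        cands = [(abs((n_top + n_bottom) / 2 - center), j)
                 for j, (n_page, n_top, n_bottom) in enumerate(numbers) if n_page == page]
        if not cands:
            links.append(None)  # no equation number detected on this page
            continue
        dy, j = min(cands)
        # Optionally refuse links that are too far apart (e.g., unnumbered equations).
        links.append(j if max_dy is None or dy <= max_dy else None)
    return links
```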

6.4 PDF to HTML Browser for Improved Accessibility

In addition to generating structured representations of the entire PDF using objects detected in individual page images, we develop a working system that allows users to view ETDs in an accessible format. The system allows users to upload a document of their choice and then view it in a web-based UI. The system is built as a Flask application, which first generates the structured version of a document based on the XML format shown earlier, and then displays the document in the browser. This system supports multiple use cases, as listed below.
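The display step can be illustrated with a small renderer from structured XML to semantic HTML, whose headings, landmarks, and links are what browsers and screen readers use for navigation. The sketch assumes a hypothetical XML layout (front/title, front/abstract, body/chapter[@title]/section[@title]/paragraph) that may differ from the actual schema, uses only the Python standard library, and omits the Flask routes for uploading and serving documents.

```python
import html
import xml.etree.ElementTree as ET


def etd_xml_to_html(xml_text: str) -> str:
    """Render a structured ETD (hypothetical tag names) as navigable, semantic HTML."""
    root = ET.fromstring(xml_text)
    title = html.escape(root.findtext("front/title", default="Untitled"))
    chapters = list(root.iterfind("body/chapter"))

    parts = ["<!DOCTYPE html>", '<html lang="en">',
             f'<head><meta charset="utf-8"><title>{title}</title></head>',
             "<body>", f"<header><h1>{title}</h1></header>"]

    # Chapter links form a table of contents that keyboard and screen-reader users can jump through.
    parts.append('<nav aria-label="Table of contents"><ol>')
    for n, ch in enumerate(chapters, start=1):
        parts.append(f'<li><a href="#ch-{n}">{html.escape(ch.get("title", ""))}</a></li>')
    parts.append("</ol></nav>")

    parts.append("<main>")
    abstract = root.findtext("front/abstract")
    if abstract:
        parts.append(f"<section><h2>Abstract</h2><p>{html.escape(abstract)}</p></section>")
    for n, ch in enumerate(chapters, start=1):
        # Heading levels mirror the chapter/section hierarchy of the XML.
        parts.append(f'<section id="ch-{n}"><h2>{html.escape(ch.get("title", ""))}</h2>')
        for sec in ch.iterfind("section"):
            parts.append(f'<section><h3>{html.escape(sec.get("title", ""))}</h3>')
            for para in sec.iterfind("paragraph"):
                parts.append(f"<p>{html.escape(para.text or '')}</p>")
            parts.append("</section>")
        parts.append("</section>")
    parts.append("</main></body></html>")
    return "\n".join(parts)
```

In a Flask application, a view function could return this string for a processed upload; escaping all extracted text guards against markup that happens to appear inside the source PDF.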

6.4.1 User-friendly View of Long Documents

One of the well-known problems with ETDs is that they are inherently long documents, and navigating them is hard. Some existing studies [41] have shown that allowing users to read long PDF documents in a web-based application is helpful and can improve the readability of such documents. By allowing users to view a long ETD in a web-based application, we expect increased usage and adoption of such documents by researchers.

6.4.2 Improved Accessibility for Those with Disabilities

A common limitation of PDF documents is their limited compatibility with accessibility tools such as screen readers. This is crucial for users with special needs, such as people who are blind, as such users often rely on accessibility tools to access knowledge. In recent years, tools such as PREP¹ have been developed to help with tagging PDFs to make them compatible with screen readers. However, our analysis found that the automatic tagging feature of PREP does not work well for ETDs, thus limiting the usability of such documents for users with accessibility needs. On the other hand, HTML-based applications integrate well with screen readers.

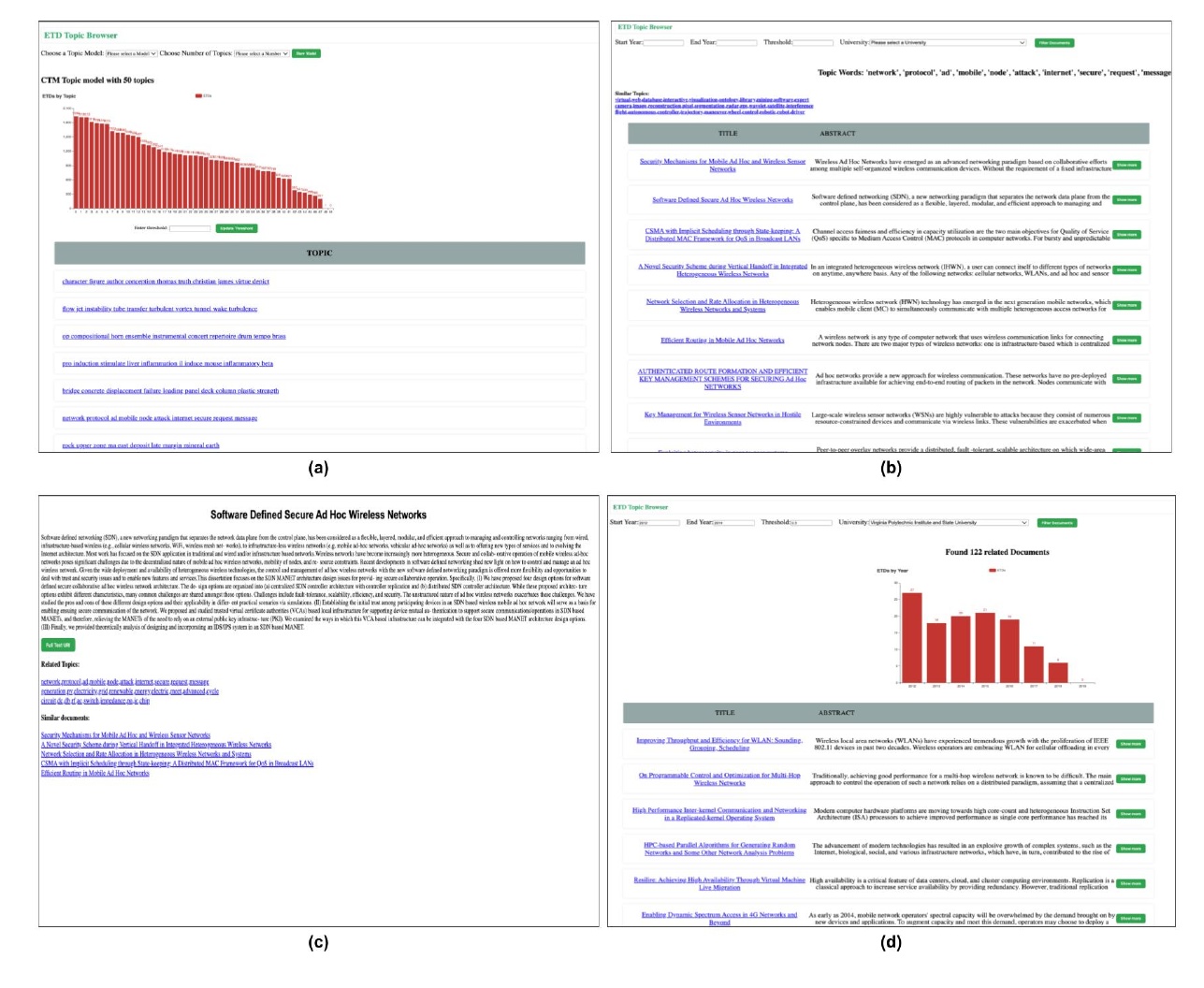

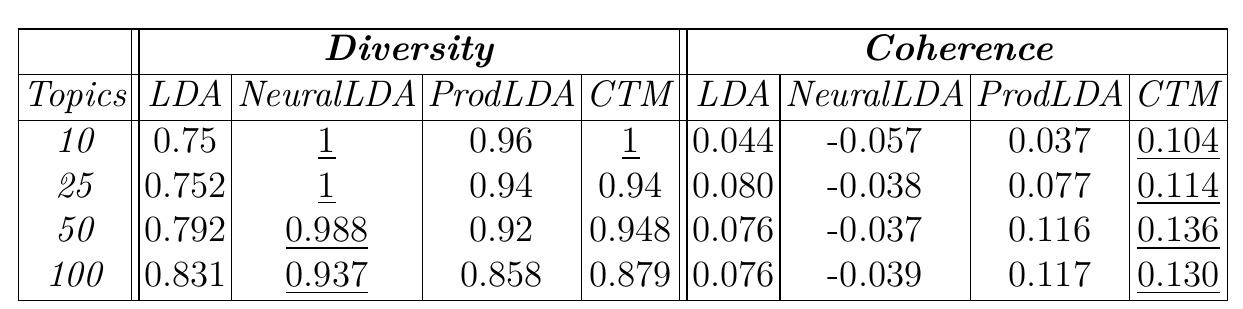

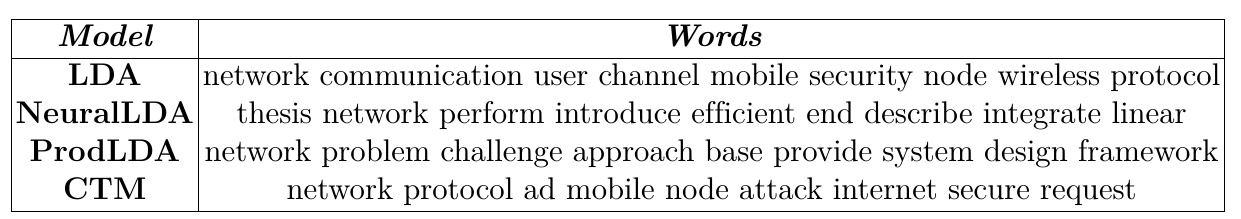

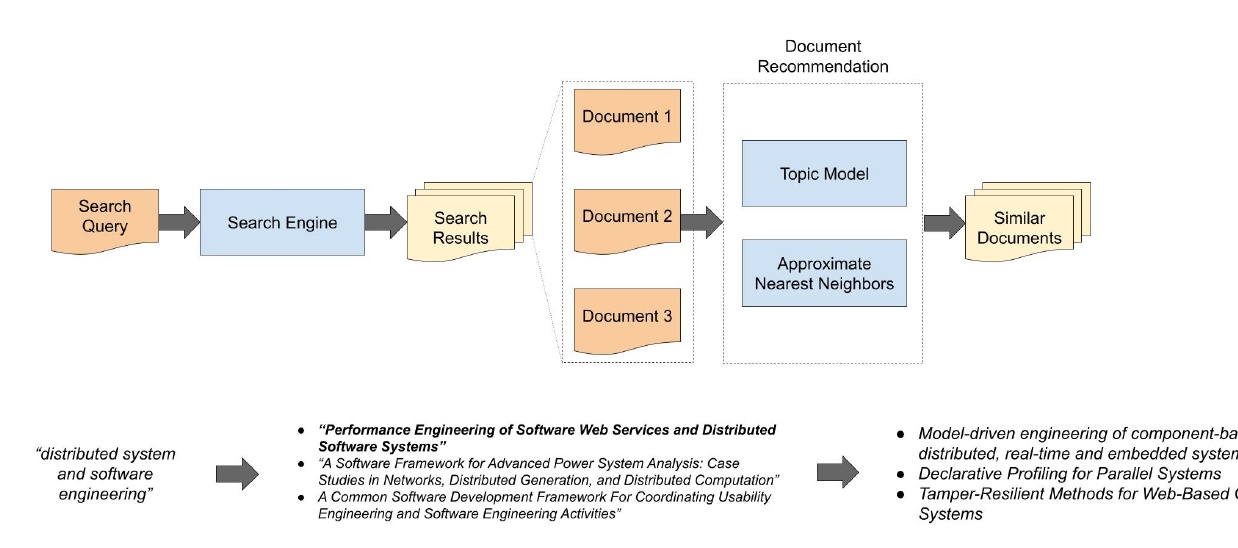

6.5 System Design